Heads up: Our Ideas Factory has been refreshed, levelled up, and grown-up into Alphero Intelligence. Some of our old posts are pretty cool tho'. Check this one out.

- What data can we extract from a Pinterest board that can be used to filter search results, or inform new product ideas?

- Image information: Who gives you more? Pinterest or Google?

- We’re going to need a load more training material to teach machines about Scandi design.

Pinterest self-identifies as a visual discovery engine. It has long established itself as the go-to platform for inspiration boards, whether you’re painting a portrait, designing a costume, or decorating your home. There are over 200 billion pins saved on Pinterest, two-thirds of which are related to brands and products. It’s a haven for product discovery.

So, naturally, we wanted to see what data we could extract from a Pinterest board to inform new product ideas.

From inspiration boards to real-world purchases

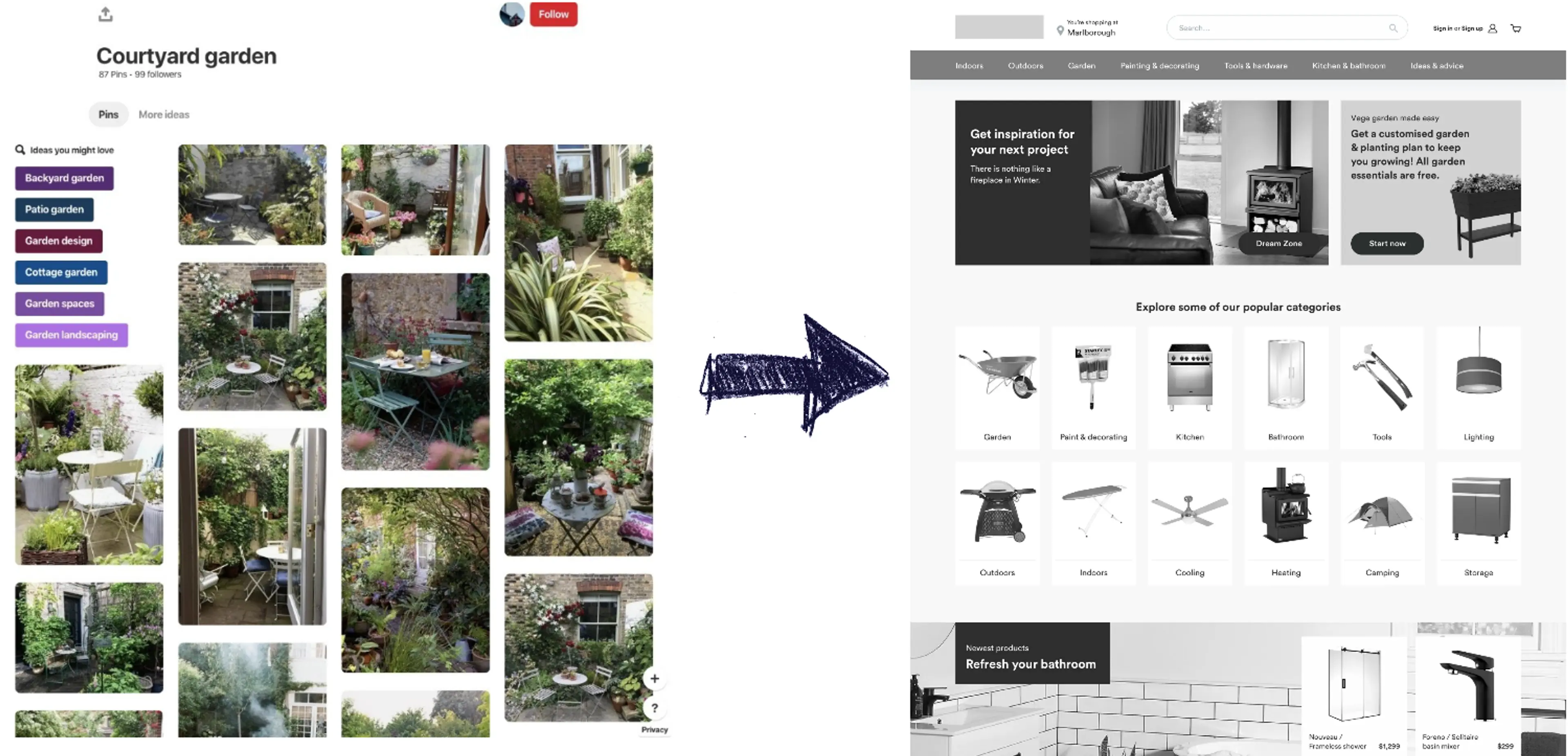

Could we train a machine learning model on a particular Pinterest board to find our inspiration items at a hardware or furniture store?

What information can we get straight from Pinterest?

There’s a limited amount of data that the Pinterest API will return.

Most of the interesting contextual data is in the notes. If we analyse the notes of each Pin pinned to a Pinterest Board, surely we can get some interesting stuff back, right?

A frequency analysis of the notes gives us way more insight into the keywords commonly associated with the Board and its Pins, but the really relevant information is buried in general classifications that are hard to sift through.
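For the curious, here is a minimal sketch of what that kind of keyword counting could look like in Python. It is not the code we ran: the stopword list, the function name `keyword_frequencies`, and the sample notes are all made up for illustration, and it assumes you already have the Pin notes as plain strings.

```python
import re
from collections import Counter

# Assumption: a tiny hand-picked stopword list, just for this illustration.
STOPWORDS = {"a", "an", "the", "and", "for", "with", "of", "in", "to"}


def keyword_frequencies(notes, top_n=5):
    """Count how often each keyword appears across a list of Pin notes."""
    counts = Counter()
    for note in notes:
        # Lowercase the note and split it into simple word tokens.
        words = re.findall(r"[a-z']+", note.lower())
        # Skip stopwords and very short tokens.
        counts.update(w for w in words if w not in STOPWORDS and len(w) > 2)
    return counts.most_common(top_n)


# Made-up notes standing in for what a board's Pins might contain.
sample_notes = [
    "Scandinavian living room with a wooden chair",
    "Minimalist wooden table for a small living room",
    "Indoor plant ideas for the living room",
]

top_keywords = keyword_frequencies(sample_notes, top_n=3)
print(top_keywords)
```

Even on three invented notes, the generic words ("living", "room") float to the top while the style words we actually care about appear once each, which is roughly the problem described above.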

What can we discover about an image with Google Cloud Vision?

Compared to the surface-level data generously provided to us by Pinterest themselves, it would be fair to hypothesise that we could derive more detailed insights using Google Cloud’s pre-trained machine learning models.

We had way more success extracting data from individual Pins than from entire Pinterest boards. At the Pin level, it was much easier to extract a colour palette and objects from a photo using computer vision. But these labels and object classifications are still way too broad to use accurately for online shopping purposes. Chair? Plant? Table? Well, yeah…
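To make the Pin-level output a bit more concrete, here is a hedged sketch that boils one Cloud Vision annotation result down to labels, objects, and a hex colour palette. It assumes the JSON shape returned by the Vision REST `images:annotate` endpoint (`labelAnnotations`, `localizedObjectAnnotations`, `imagePropertiesAnnotation`). The helper name `summarise_annotations`, the `min_score` cut-off, and the sample response are our own illustrative inventions, not real results from the experiment.

```python
def summarise_annotations(response, min_score=0.6):
    """Reduce one Vision annotation response (a dict) to labels, objects and a palette."""
    # Keep only labels and objects the model is reasonably confident about.
    labels = [
        a["description"]
        for a in response.get("labelAnnotations", [])
        if a.get("score", 0) >= min_score
    ]
    objects = [
        o["name"]
        for o in response.get("localizedObjectAnnotations", [])
        if o.get("score", 0) >= min_score
    ]

    colors = (
        response.get("imagePropertiesAnnotation", {})
        .get("dominantColors", {})
        .get("colors", [])
    )
    # Order the palette by how much of the image each colour covers.
    palette = []
    for c in sorted(colors, key=lambda c: c.get("pixelFraction", 0), reverse=True):
        rgb = c.get("color", {})
        palette.append(
            "#{:02x}{:02x}{:02x}".format(
                int(rgb.get("red", 0)),
                int(rgb.get("green", 0)),
                int(rgb.get("blue", 0)),
            )
        )
    return {"labels": labels, "objects": objects, "palette": palette}


# Invented sample data in the REST response shape, for illustration only.
sample_response = {
    "labelAnnotations": [
        {"description": "Chair", "score": 0.97},
        {"description": "Plant", "score": 0.91},
        {"description": "Interior design", "score": 0.55},
    ],
    "localizedObjectAnnotations": [
        {"name": "Chair", "score": 0.88},
        {"name": "Table", "score": 0.72},
    ],
    "imagePropertiesAnnotation": {
        "dominantColors": {
            "colors": [
                {"color": {"red": 120, "green": 100, "blue": 80}, "pixelFraction": 0.2},
                {"color": {"red": 240, "green": 240, "blue": 235}, "pixelFraction": 0.5},
            ]
        }
    },
}

summary = summarise_annotations(sample_response)
print(summary)
```

The palette is easy to work with. The labels are where it gets thin: "Chair" and "Table" are correct, but nowhere near specific enough to shop with.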

What else could we do with Pinterest?

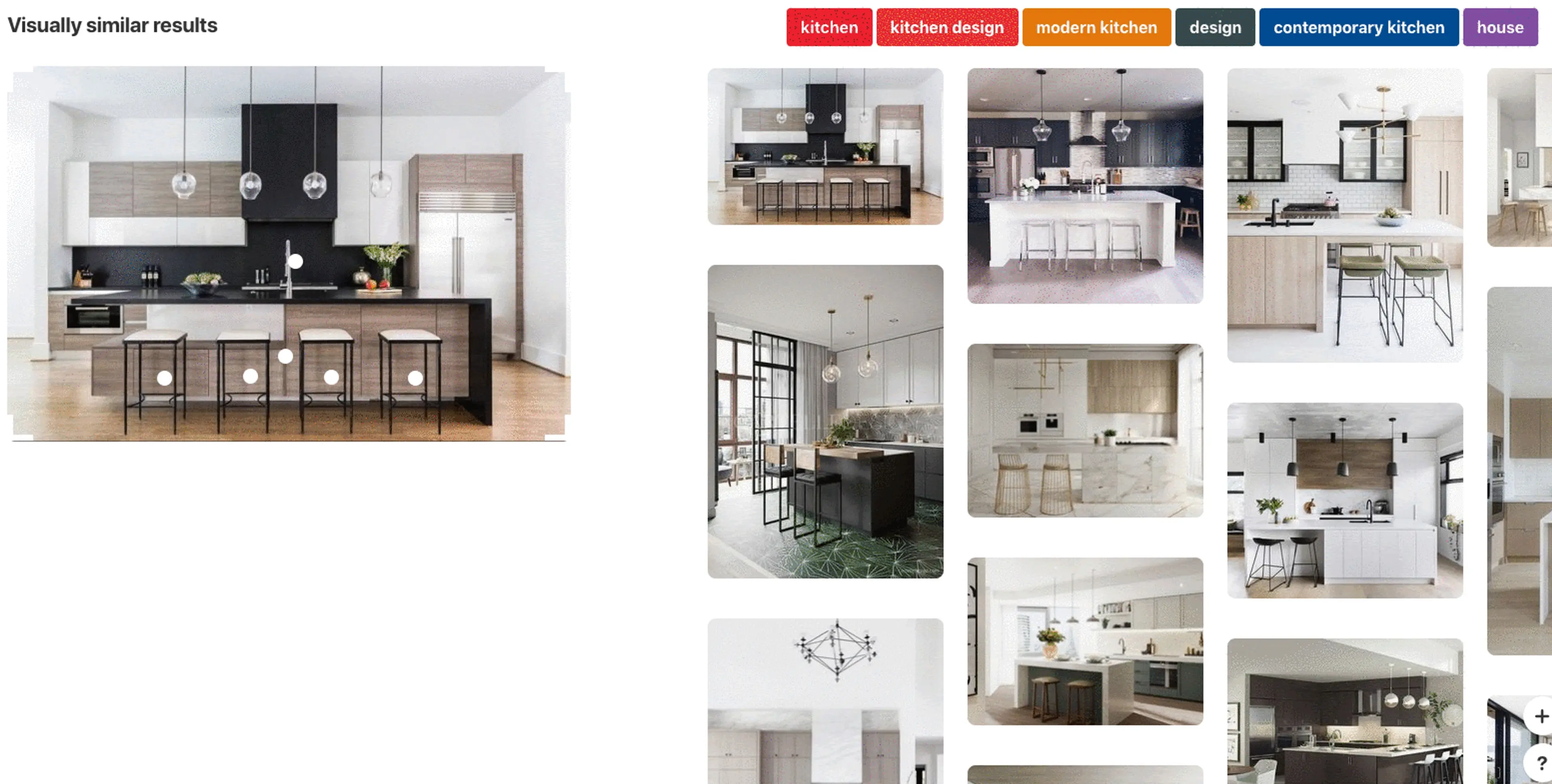

Pinterest has its own tool for finding visually similar results, but it also has its mishaps.

If we have a detailed enough image, consisting of a number of objects, we can use the Pinterest API to extract more granular data.

But as for taking those labels and chucking them into online stores as search queries?

It doesn’t go well.

Machines can recognise things, but they just can’t categorise them accurately with the limited amount of training we were able to do based on Pinterest. Sometimes the style is more relevant than the literal object: Scandinavian design, for example. We can return more meaningful results via computer vision than we can with frequency analysis, but it needs refinement. Iterating over parts of images again and again might also yield better results. We’re going to need a load more training material to teach machines about Scandi.

Regardless, even if we can get perfect information from an image, it doesn’t matter if our catalogue doesn’t return results based on that information. Search performance is important and proper tagging within a catalogue will dictate the meaningfulness of results. That’s our takeaway.
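As a toy illustration of that takeaway, here is a sketch of a tag-based catalogue search. Everything in it is hypothetical: the catalogue, the product names, and the `search_catalogue` helper are invented, and a real store search will be far more sophisticated. The point is only that an item with no tags can never be found, however good the labels are.

```python
def search_catalogue(catalogue, terms):
    """Return item names ranked by how many search terms match their tags."""
    wanted = {t.lower() for t in terms}
    results = []
    for item in catalogue:
        tags = {t.lower() for t in item["tags"]}
        overlap = wanted & tags
        if overlap:
            results.append((len(overlap), item["name"]))
    # Best matches first; items with equal scores keep catalogue order.
    results.sort(key=lambda r: r[0], reverse=True)
    return [name for _, name in results]


# Invented catalogue: the side table exists but was never tagged.
catalogue = [
    {"name": "Oak dining chair", "tags": ["chair", "oak", "scandinavian"]},
    {"name": "Ash armchair", "tags": ["chair"]},
    {"name": "Birch side table", "tags": []},
]

# Labels such as those a vision model might return for a Pin.
matches = search_catalogue(catalogue, ["Chair", "Scandinavian", "Table"])
print(matches)
```

The well-tagged chair ranks first, the sparsely tagged one second, and the untagged table never shows up even though "Table" was one of the search terms.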