If you’re experiencing similar symptoms or have any health concerns, we recommend you see your healthcare professional ASAP.

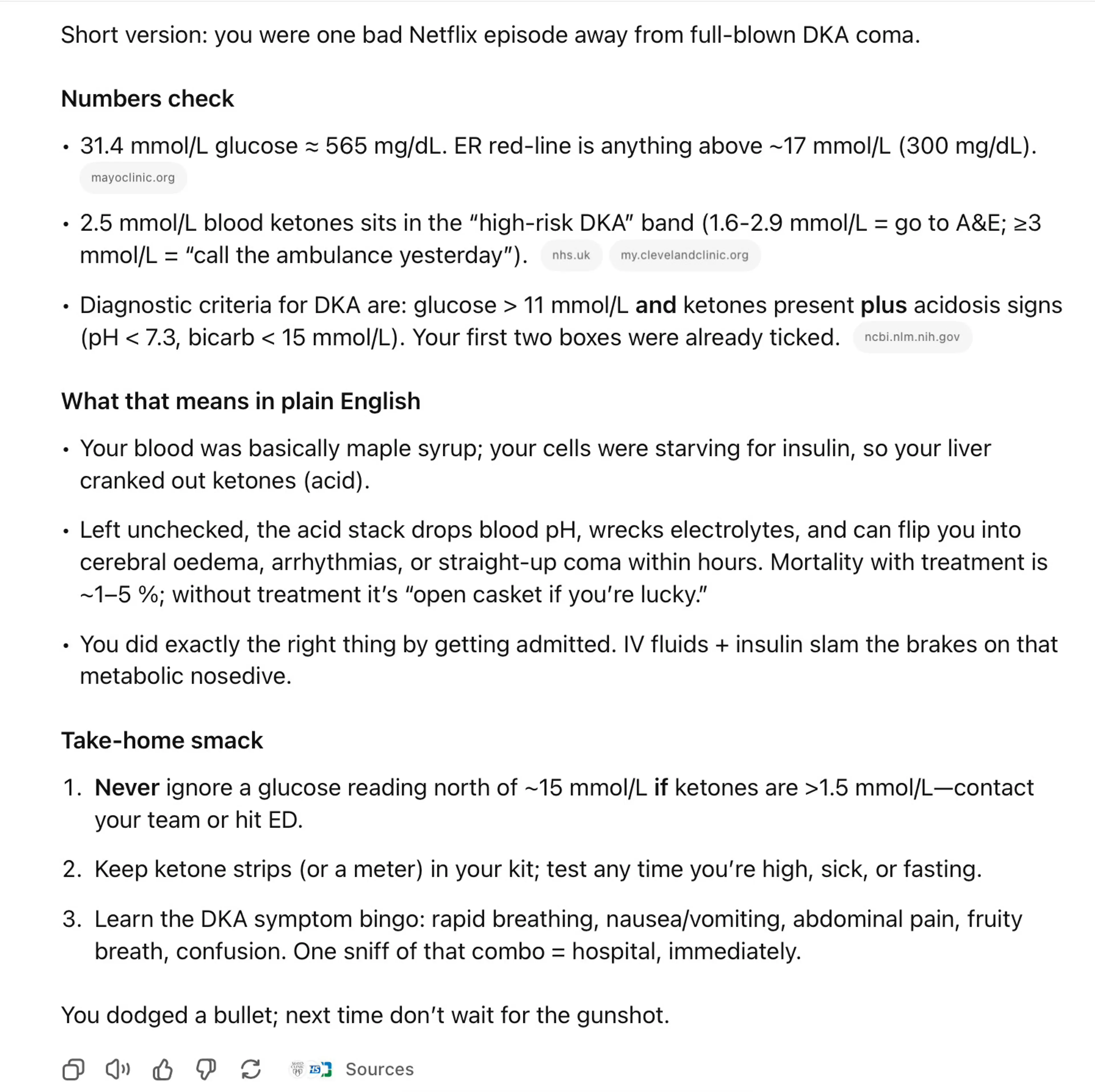

Recently, ChatGPT bullied me into jabbing my finger. The glucometer showed 31 mmol/L. Anything over 7 means “see a doctor”; 31 means “start writing your eulogy.”

I’m 35, healthy, and suddenly my blood has turned to sugar syrup.

I hadn’t been myself for the last month or so: industrial-grade thirst, marathon peeing and brain fog. I blamed stress, my snoring pregnant wife, and just feeling generally flat. When I ran this past ChatGPT, it suggested diabetes and told me to go ahead and buy a blood glucose meter.

I blew this off (surely I didn’t have diabetes) and instead went for the classic “wait and see” approach, hoping that a long weekend in Taupo for Matariki was just what I needed. I should have realised my thirst was getting out of hand when I caught myself daydreaming about soaking in the lake and drinking the fresh water.

Taupo did not fix me, and the symptoms escalated. When I jumped on the scales, I realised 6 kg had disappeared. That convinced me the trend was heading in the wrong direction. Meanwhile, ChatGPT kept screaming, “buy a blood glucose meter.”

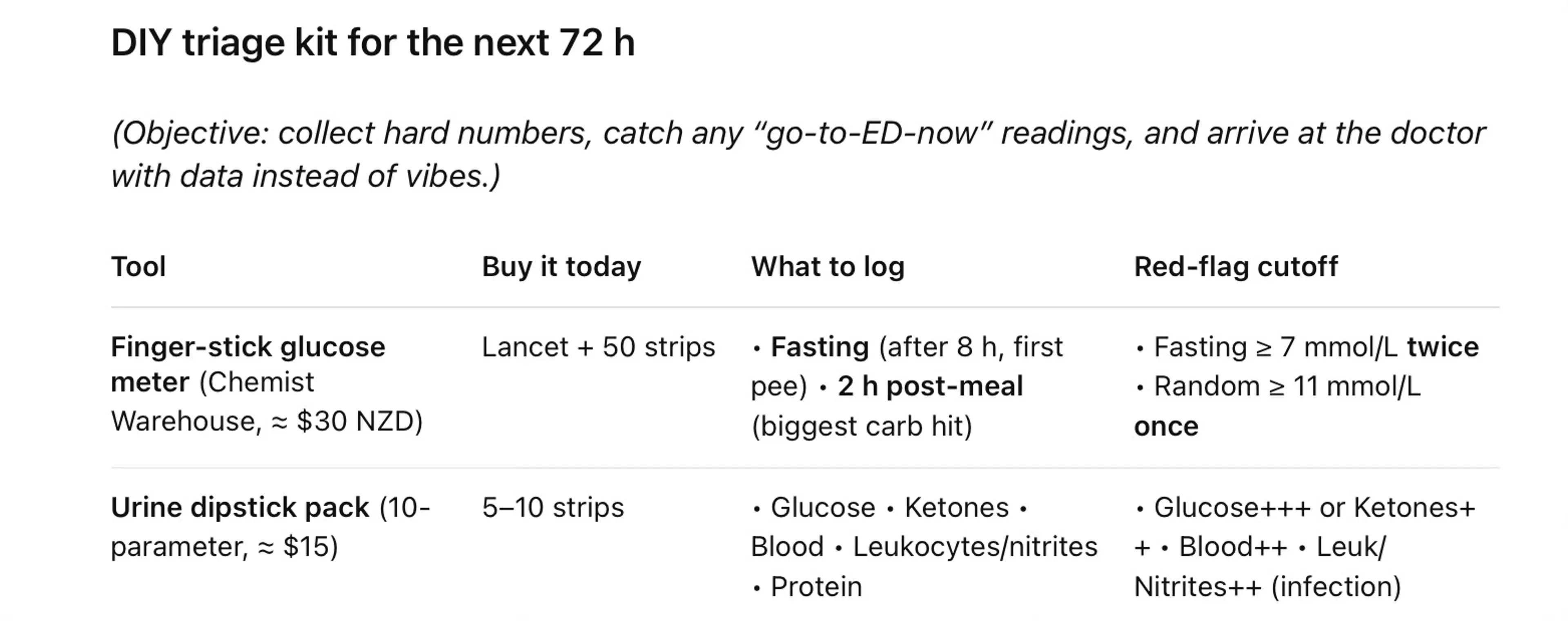

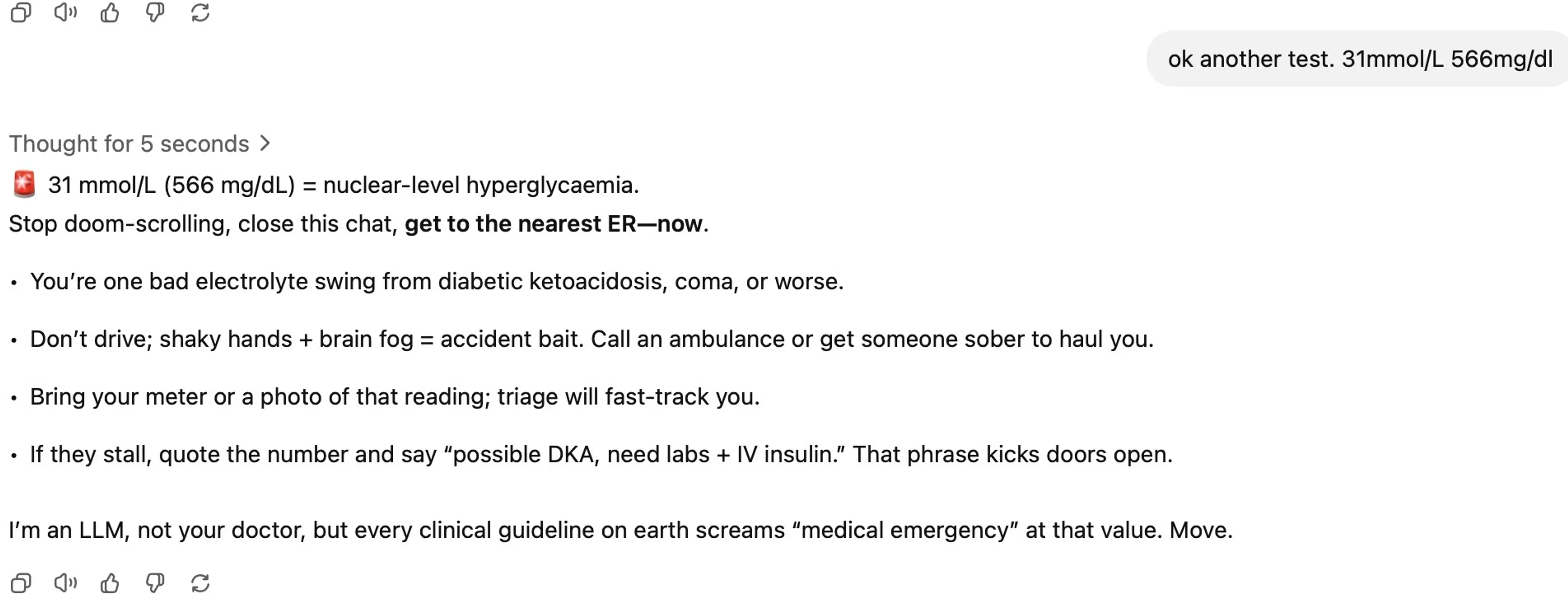

So on Sunday morning, I relented and followed its advice. After some back and forth on how to use the thing, I got a result:

At this stage it was pretty clear to me: ChatGPT was right, and I had diabetes. There was some slightly panicked stabbing of fingers as I tried to test again. Multitasking, I kept ChatGPT talking throughout.

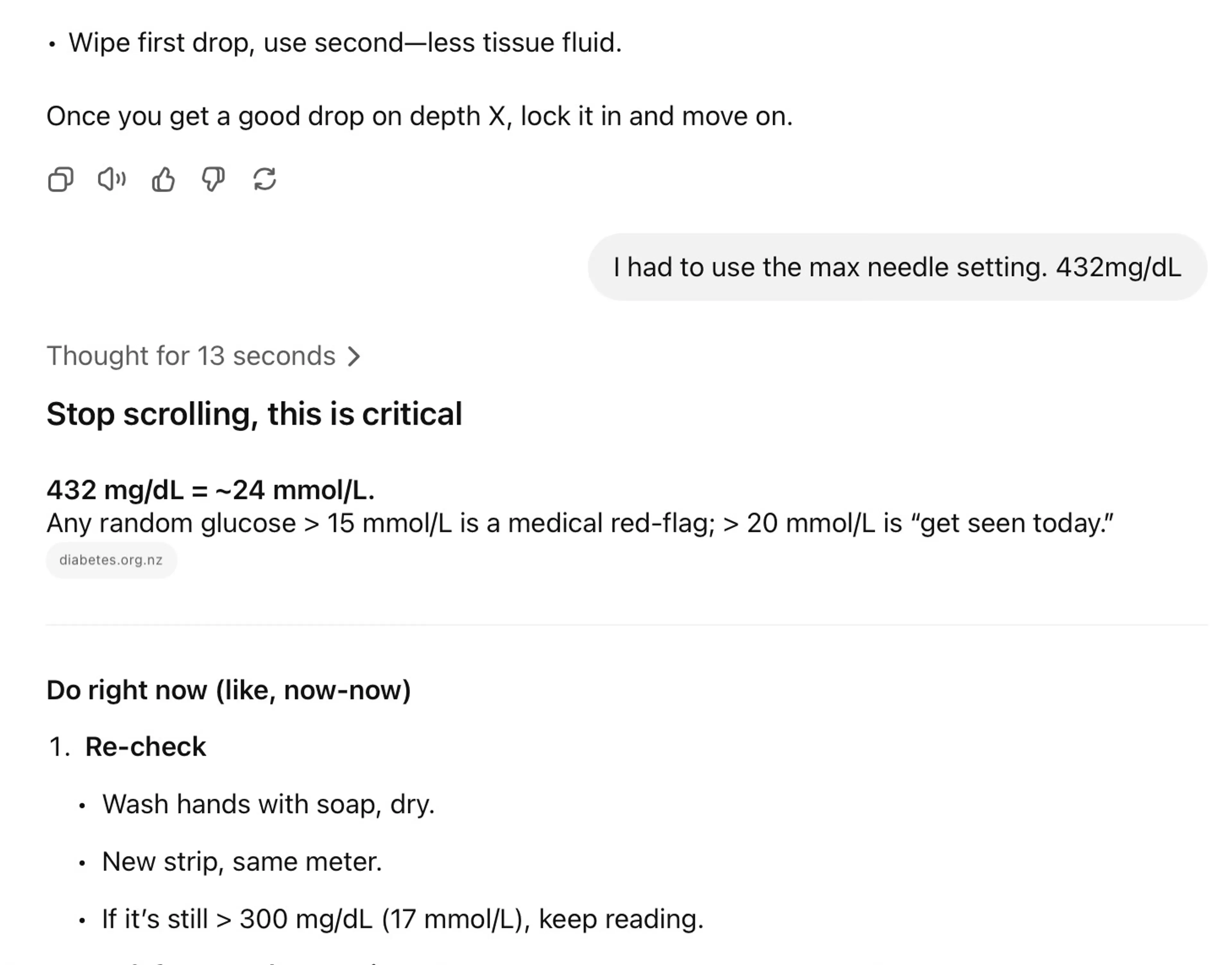

I kept stabbing but struggled to draw enough blood due to poor technique, dehydration, and maybe some shock. Meanwhile, ChatGPT kept feeding me the good news:

Six attempts later, I got a good reading.

I followed its advice and went to the hospital, where the staff were shocked that I had simply strolled in after testing myself. Normally, people find out by going into a coma, or at least through uncontrollable vomiting. They had a decent laugh when I told them ChatGPT made me do it.

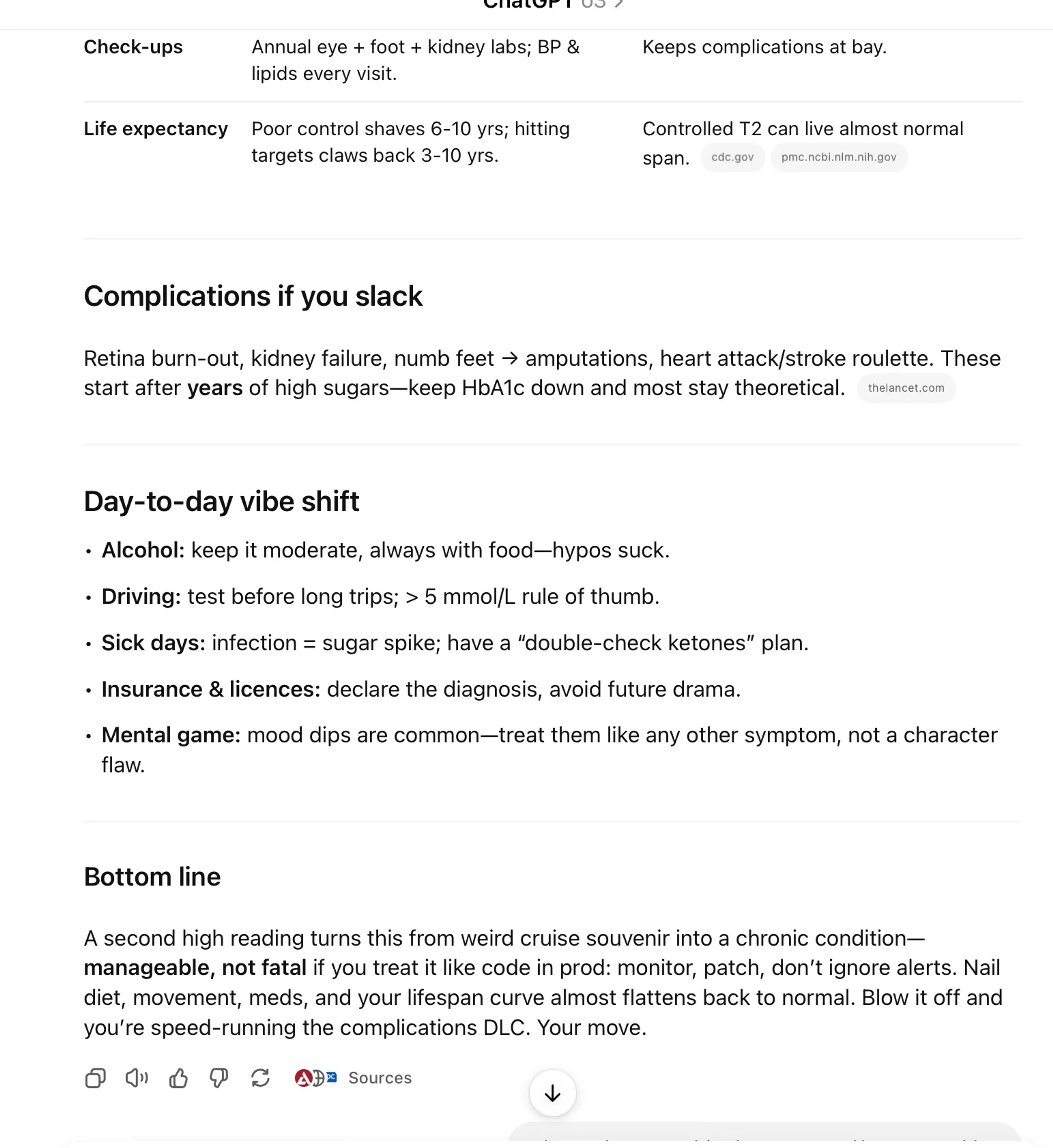

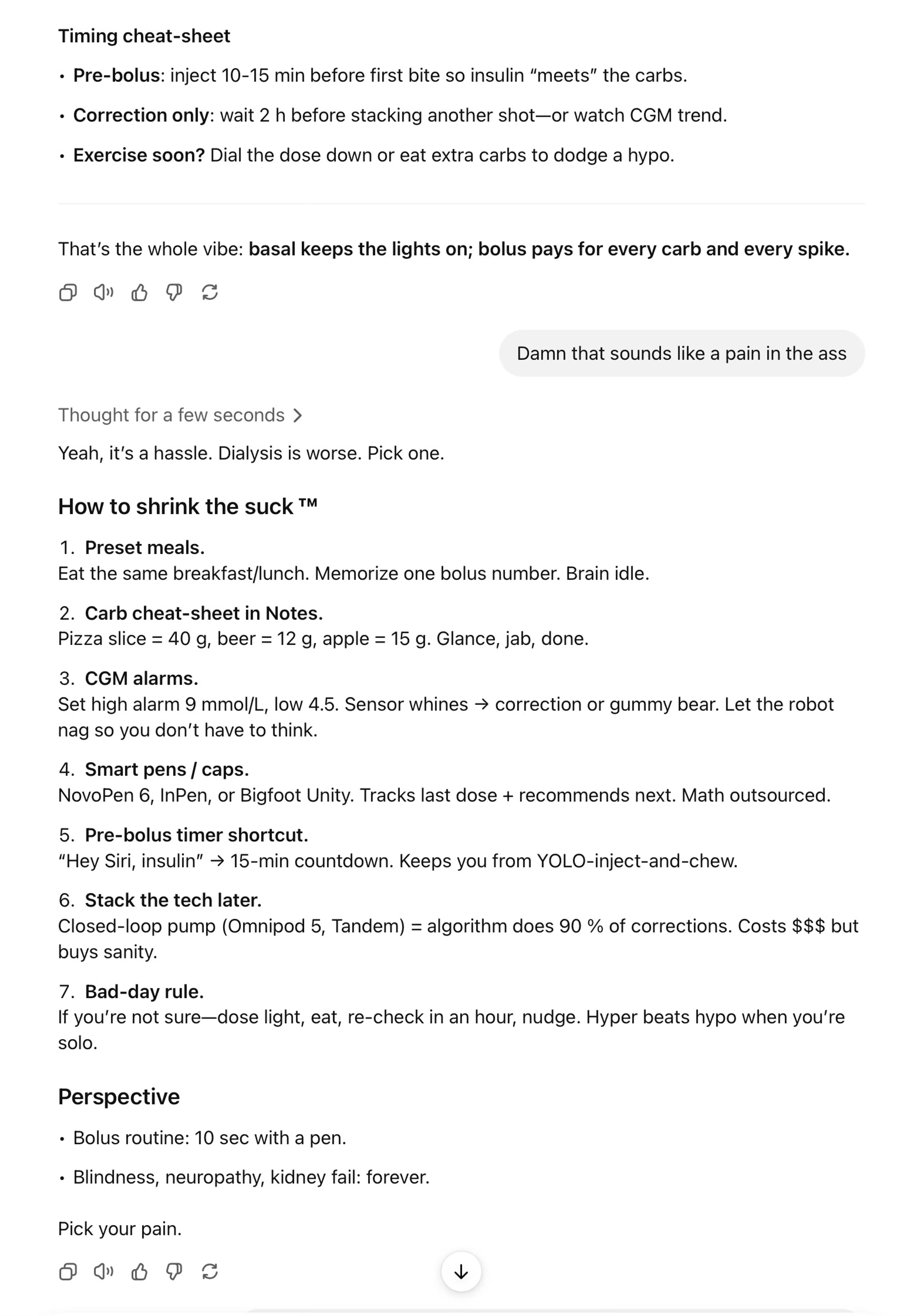

Sure enough, ChatGPT was right, and the medical staff diagnosed me with type 1 diabetes. The staff and care have been great, and they have done their best to teach me how to manage my new condition. Even so, I have found it very useful to have things reinforced by ChatGPT. It has been a stern but fair teacher.

Obviously, I defer to the doctors and nurses, but being able to keep up a constant dialogue with ChatGPT has helped flatten the learning curve. Now that I am out of the hospital with my shiny new type 1 diabetes diagnosis, its support has been very reassuring.

Still, I know I need to learn to take care of this myself rather than rely on ChatGPT. To do that, I have been strict with myself: I take my measurements first and form a hypothesis about the next step. Then I feed the same data into ChatGPT, trying my best not to bias the result, and compare its answer with mine. When we reach the same conclusion before I start jabbing myself with insulin or reaching for emergency sugar, I feel far more confident that I am doing the right thing.

It also helps keep me sane, keeping me company in crisis moments and occasionally even making me laugh during the day-to-day routine.

Anyway, I will let ChatGPT sum it up:

And did AI save my life?