Resistance to change is natural. We have evolved to associate change with uncertainty, and uncertainty with danger. Even the biggest risk-takers will admit that embracing uncertainty is a conscious choice, not a lack of regard for the outcome. I am no different; my saving grace is my optimism, an inbuilt belief that where there is a will, there is a way. Currently, AI is stirring up these feelings of fear and danger, aided by media sensationalism. I do believe AI is going to transform the world, but not through a Skynet-style leap to self-awareness. Rather, it will reshape our reality gradually, through its integration into the tools and systems we use and through the way it shifts our perceptions of efficiency.

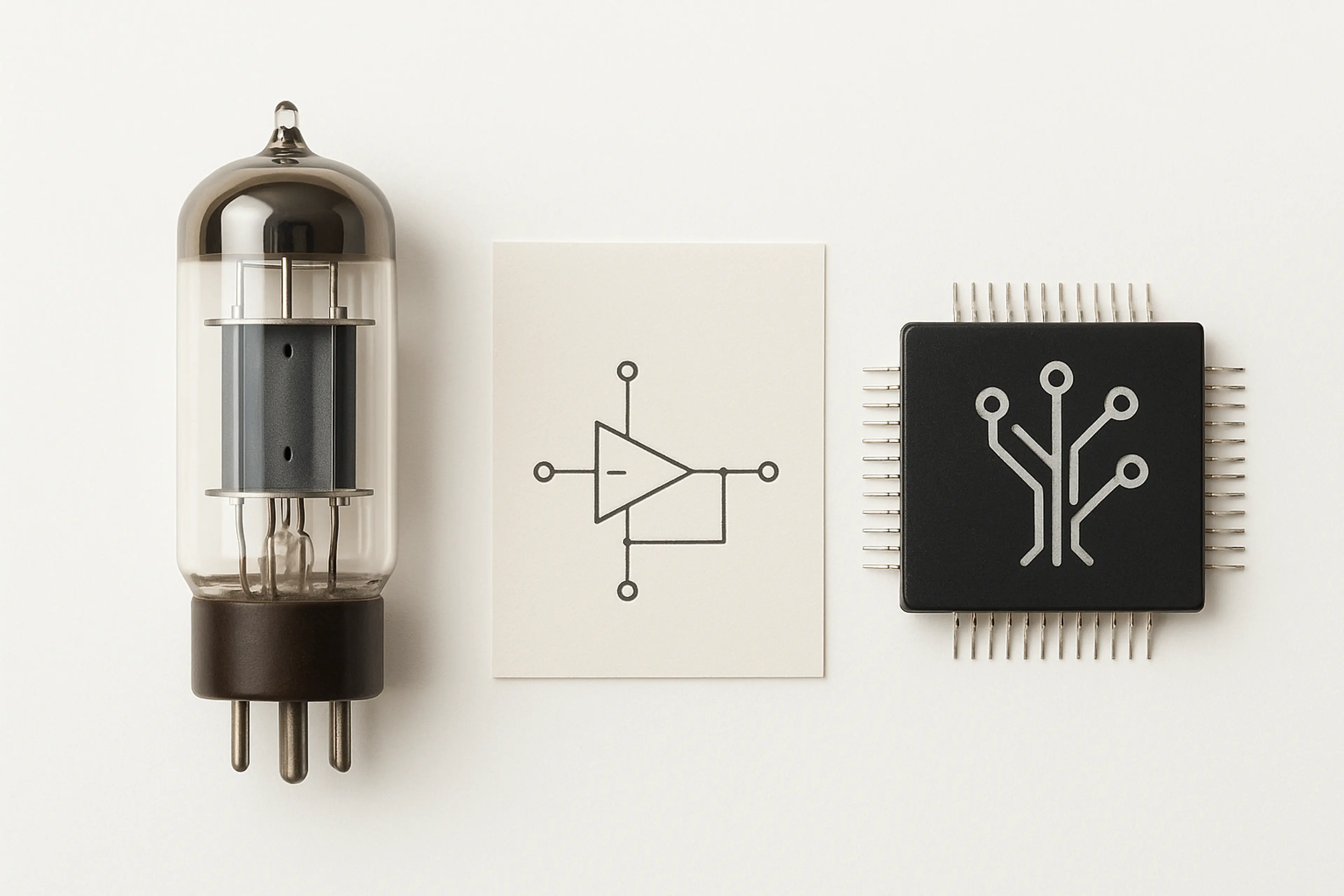

Software development carries a higher expectation of continuous change than most other industries, a pace long associated with Moore’s law. AI's impact on people is deeply emotive, and concerns about job displacement are running high. However, I see AI as a continuation of the long-running trend to make creating software faster and easier, ultimately freeing up more room for creativity.

Test automation is an excellent example of how a tool we once feared can become essential to how we work. Not long ago, automation was seen as a threat, poised to replace human testers and take their jobs. Then came the overcorrection: a wave of over-engineering in which teams tried to automate everything and code bloat ran rampant. Over time, the dust settled, and we came to understand that automation isn't a replacement for skilled testers; it's an enabler. Today it takes care of the repetitive tasks, giving testers more space to focus on what humans do best: exploratory testing, critical thinking, and creative problem-solving. What started as a fear became a fundamental tool in our kit.

As we explore opportunities for AI across the development lifecycle, for both our test and development teams, we're trying to walk a fine line: treating AI as another tool that empowers us, rather than assuming it's a silver bullet for all our challenges. At the same time, we keep our eyes open for the opportunities that inevitably emerge when a disruptive technology arrives.

A great example of this balanced approach is Uber's experience of using AI to write unit tests. Unit tests are an excellent starting point for AI-generated code: they don't go into production, yet they add immediate value through increased test coverage and fewer regressions. As you would expect from anyone who has lived through previous hype cycles, there was immediate scepticism. How do you know the generated tests are valid? Won't increasing coverage just lead to code bloat? These are exactly the questions good developers instinctively ask, and they push us to think more critically about our solutions and hold them to higher standards.

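If we're concerned about test validity, we can put a human in the loop to review the tests, or, even better, validate them by deliberately breaking the code under test and checking that the tests fail, a technique known as mutation testing. Ultimately, tests exist to ensure code maintains its integrity and functionality, so a test that still passes when the code is broken isn't doing its job.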
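The idea of validating tests by deliberately breaking code can be sketched in a few lines. This is a minimal Python illustration, not Uber's implementation: the function `apply_discount`, the hand-written mutants, and both test suites are hypothetical, and dedicated mutation-testing tools generate mutants automatically rather than relying on hand-written ones. A suite that passes on the original code but fails on a mutant has "killed" that mutant; the fraction of mutants killed (the mutation score) gives a rough signal of how much a suite actually checks.

```python
from typing import Callable, List

Fn = Callable[[float, float], float]
Suite = Callable[[Fn], bool]

# Hypothetical function under test: reduce `price` by `percent` (0-100).
def apply_discount(price: float, percent: float) -> float:
    return price * (1 - percent / 100)

# Hand-written "mutants": deliberately broken variants of apply_discount.
# (Assumption: real tools would derive these automatically from the source.)
MUTANTS: List[Fn] = [
    lambda price, percent: price * (1 + percent / 100),  # sign flipped
    lambda price, percent: price * (1 - percent / 10),   # wrong divisor
    lambda price, percent: price,                        # discount ignored
]

def weak_suite(fn: Fn) -> bool:
    """Only checks the zero-discount case."""
    return fn(100.0, 0.0) == 100.0

def strong_suite(fn: Fn) -> bool:
    """Also checks a non-zero discount: 25% off 200 should be 150."""
    return fn(100.0, 0.0) == 100.0 and abs(fn(200.0, 25.0) - 150.0) < 1e-9

def mutation_score(suite: Suite, original: Fn, mutants: List[Fn]) -> float:
    """Fraction of mutants that make the suite fail ("killed" mutants)."""
    if not suite(original):
        raise ValueError("the suite must pass on the unmodified code")
    killed = sum(1 for mutant in mutants if not suite(mutant))
    return killed / len(mutants)

if __name__ == "__main__":
    print(mutation_score(weak_suite, apply_discount, MUTANTS))    # 0.0
    print(mutation_score(strong_suite, apply_discount, MUTANTS))  # 1.0
```

Both suites execute every line of `apply_discount`, so line coverage alone can't tell them apart; only the mutation score reveals that the weak suite would let every one of these bugs through.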

As we write more tests faster, the test suite grows larger and larger, and that is code that needs to be maintained. But once we have a framework for checking whether tests are valid, AI can then optimise the suite, increasing reuse and improving performance. The best part is that these tools don't only work on AI-generated tests; they can also validate, and even optimise, human-written ones. By critically questioning the validity of AI-generated tests and building in self-validation, you inadvertently build tools that support better human-written tests too, creating a system that can validate and optimise tests with remarkable efficiency.

What makes Uber's approach particularly noteworthy is how they've maintained the human element at the centre of their processes. Rather than blindly implementing AI-generated solutions, they've created collaborative workflows where AI suggestions work alongside human expertise, enhancing the broader system rather than replacing it.

Perhaps the most fascinating aspect of technological change is how often the most valuable applications emerge from unexpected places. The scepticism that prompted Uber to build validation frameworks for AI-generated tests ultimately produced tools that improved all of their testing, whether the tests were written by humans or generated by machines. The original concern, "How do we know these tests are valid?", led to systems that benefit their entire development ecosystem.

This pattern repeats throughout technological history. The initial use cases we imagine often pale in comparison to what emerges once a technology is integrated into our workflows and subjected to real-world challenges. By remaining open to these unexpected applications while maintaining a critical eye, we position ourselves to capture value that might otherwise remain hidden.

Our relationship with technology is not one of replacement but of renaissance. Each tool that handles the mundane creates room for the magnificent: the exploratory testing that uncovers edge cases, the creative solutions that emerge from human intuition, the critical insights that come from years of wrestling with complex problems. Technology may be evolving at an exponential rate, but our capacity to think differently, to question assumptions, and to find novel applications grows alongside it.

The future belongs not to those who implement tools fastest, but to those who integrate them most thoughtfully, transforming initial uncertainty into sustainable advantage through the simple act of refusing to stop thinking critically about the world we're building.