Heads up: Our Ideas Factory has been refreshed, levelled up, and grown up into Alphero Intelligence. Some of our old posts are pretty cool tho'. Check this one out.

- Complex forms can instigate device hopping as users gather documents to submit information.

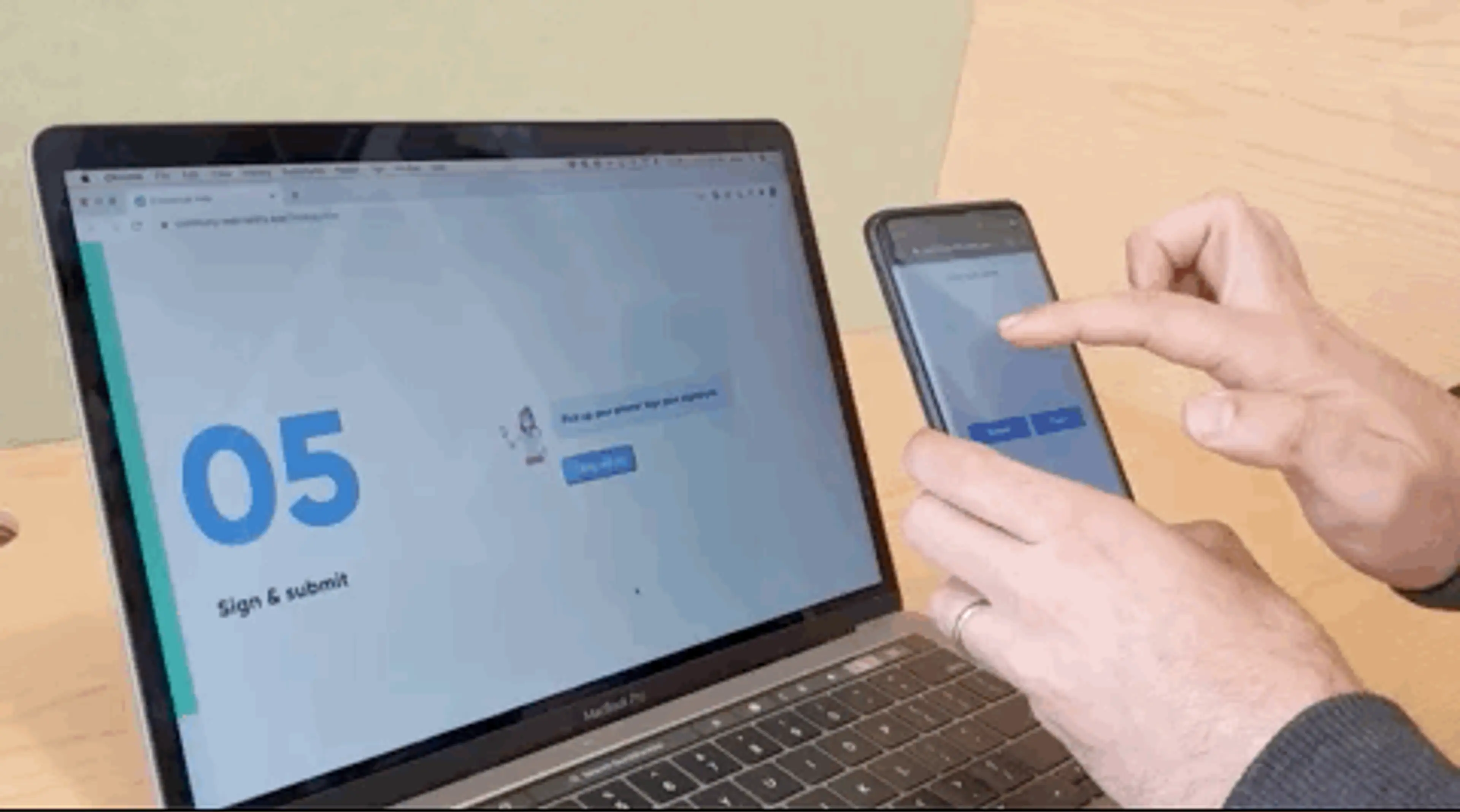

- We created a prototype that can link multiple devices so they can provide information into a single form.

- The prototype expanded to use other phone capabilities to provide extra information to a form.

As part of our never-ending quest (hampered by AML-grade identity verification requirements) to make customer onboarding a thing of intuition and joy (hey, we can have a nerdy nirvana!), a ‘how might we reimagine customer onboarding’ card is a permanent resident on our Futures Wall.

We all interact with forms on a daily basis, and more often than not we need to provide something extra, like a photo or a document, to prove our identity or place of residence. When this happens, an inevitable device dance begins: you might need to grab your phone and take a photo of a bill (or find the document you downloaded to your phone last week), email it to yourself, then go to your desktop computer, download it and add it to the form.

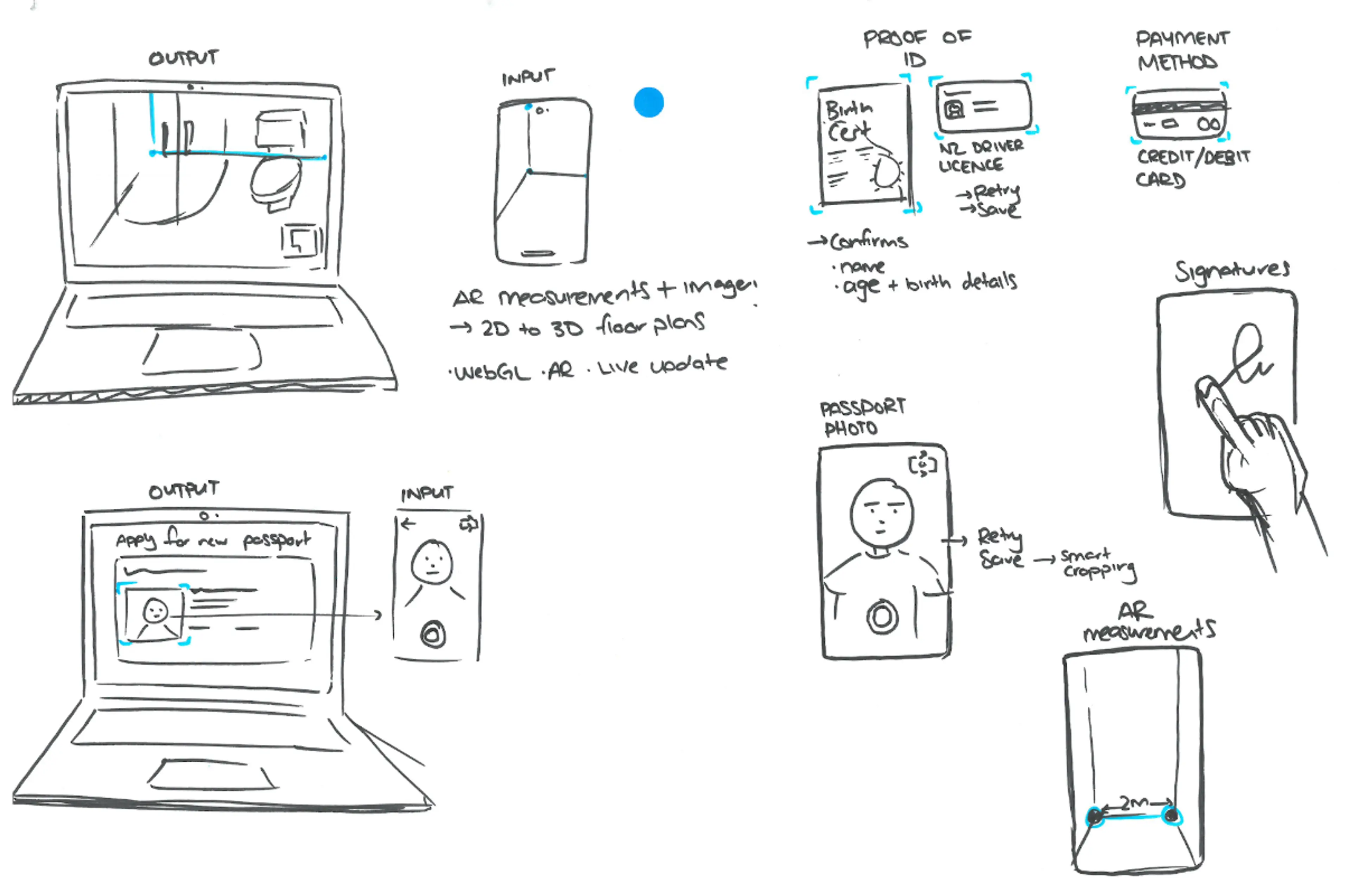

The ideation process

The team was given a day to rapidly ideate different ways we could help customers complete a complex ‘join’ process. Nothing was off the table. Some of the (parked) ideas included using wearables to detect an increased heart rate indicating stress during form completion, and motion detection so that a user could put their hand up and ask for help.

The idea

The idea we liked the most became what we initially called Companion Form. It has since been renamed FORMidable by our Ideas Factory manager. Because who doesn’t like a good pun?

The idea centres on complex online registration or sign-up flows where users must scan and upload a range of items, usually to prove their identity, though the same approach could serve other purposes. These items could include:

- Drivers licences or passports

- Utility bills (for proof of address and location, usually validated against an IP address)

- Proof of life (‘liveness’ tests are increasingly common in onboarding flows for government agencies or banks)

- A scanned signature

- Anything else we could think of!

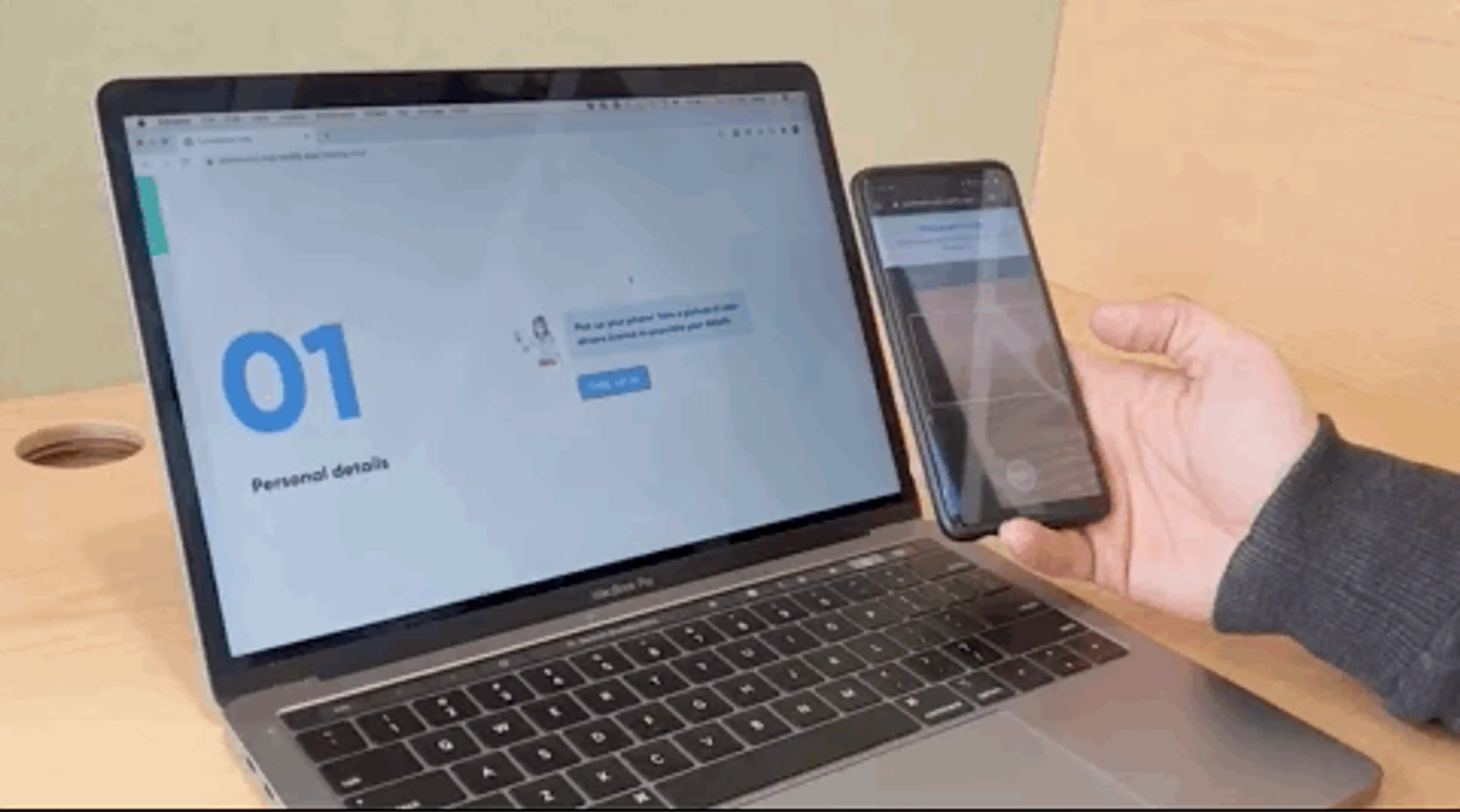

What if we could simplify this process by pairing your desktop with a phone when you start the form, and the phone screen changes state as you work through the form?

For example, where a driver's licence scan is required, the phone screen transitions to camera mode with a frame for the licence. You scan and hit upload, and the image appears immediately on the desktop in the form flow.
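One way to drive those state changes is a small message protocol between the two browsers: the desktop announces the current task, and the phone switches to the matching screen. The sketch below is a minimal TypeScript version of that idea; the task names, modes and message shapes are hypothetical, not the prototype’s actual protocol.

```typescript
// Hypothetical form tasks the desktop can hand over to the phone.
type PhoneTask = "scanLicence" | "livenessCheck" | "scanBill" | "shareLocation" | "signature";

// Hypothetical screen states the phone page can switch between.
type PhoneMode = "camera" | "challenge" | "location" | "drawingPad" | "idle";

// Messages from the desktop: start a task on the phone, or go back to waiting.
type DesktopToPhone =
  | { kind: "startTask"; task: PhoneTask; stepId: number }
  | { kind: "waiting" };

// Which phone screen each task needs.
const MODE_FOR_TASK: Record<PhoneTask, PhoneMode> = {
  scanLicence: "camera",
  livenessCheck: "challenge",
  scanBill: "camera",
  shareLocation: "location",
  signature: "drawingPad",
};

// Phone side: decide what to show for an incoming desktop message.
function phoneModeFor(message: DesktopToPhone): PhoneMode {
  return message.kind === "startTask" ? MODE_FOR_TASK[message.task] : "idle";
}

// Messages travel as JSON text (for example over a WebRTC data channel).
function encodeMessage(message: DesktopToPhone): string {
  return JSON.stringify(message);
}

// No validation here: a real implementation should check the shape before trusting it.
function decodeMessage(text: string): DesktopToPhone {
  return JSON.parse(text) as DesktopToPhone;
}
```

Keeping the desktop in charge of which step is active means the phone page stays simple: it only ever reacts to the latest message.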

Our team created a prototype that pairs your computer and mobile phone through the browser and shares information between them, so you can use your phone’s capabilities while completing complex forms on your computer.

Getting the conceptual design right was the initial challenge

We had a vision for the type of experience we wanted. It needed to be completely intuitive and seamless, taking the heavy lifting away from the user. The designers’ first concepts were based on a range of more ‘traditional’ form patterns. Unfortunately, these just didn’t feel intuitive enough: there was too much going on on screen, and it wasn’t clear when you needed to glance at the phone. The developers initially struggled to understand what we were trying to achieve, which wasn’t a great start!

We decided to pivot the design approach and rethink the form experience completely. We found that making a single task the focus of both the desktop and mobile views at any one time solved the problem. This allowed us to introduce an interaction pattern that prompted the user each time there was a task to complete on the phone.

We also found it was easier to concept and prototype around a particular signup scenario. In this case: registering for the great Alphero Quiz. Which obviously requires extensive proof of your identity and proof of liveness! We take our quizzes seriously.

We produced a prototype

Once the designers nailed the concept, the developers were off and running.

Create the connection between devices

The first step was creating the initial connection between the devices, which the user can trigger by scanning a QR code on the site. To get this going, we used a technology available out of the box in all modern browsers: WebRTC. It lets two web browsers exchange voice, video and data directly, without complicated backend APIs or a mobile app; a lightweight signalling service is still needed to introduce the two browsers to each other.
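The QR code only needs to carry enough information for the phone to find the right desktop session. A minimal sketch, assuming a hypothetical pairing page at `https://example.com/pair` and a random session ID: it builds the URL the QR code would encode and reads the session ID back on the phone.

```typescript
// Hypothetical pairing page that the phone opens after scanning the QR code.
const PAIRING_PAGE = "https://example.com/pair";

// Turn random bytes into a hex session ID. In the browser, pass
// crypto.getRandomValues(new Uint8Array(16)) so the ID is hard to guess.
function sessionIdFrom(randomBytes: Uint8Array): string {
  let hex = "";
  for (let i = 0; i < randomBytes.length; i++) {
    hex += ("0" + randomBytes[i].toString(16)).slice(-2);
  }
  return hex;
}

// Desktop side: the URL to encode in the QR code.
function pairingUrl(sessionId: string, base: string = PAIRING_PAGE): string {
  const url = new URL(base);
  url.searchParams.set("session", sessionId);
  return url.toString();
}

// Phone side: read the session ID back out of the scanned URL (null if absent).
function sessionIdFromUrl(scanned: string): string | null {
  return new URL(scanned).searchParams.get("session");
}
```

With the session ID shared, each browser creates an `RTCPeerConnection`; the desktop opens a channel with `createDataChannel()` and sends an offer from `createOffer()`, and the phone replies with `createAnswer()`. Those offers, answers and ICE candidates travel through a small signalling relay keyed by the session ID, which (along with any STUN or TURN servers needed to get through NATs) is the main server-side piece this approach requires.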

Have fun getting the user to upload all sorts of things from their phone

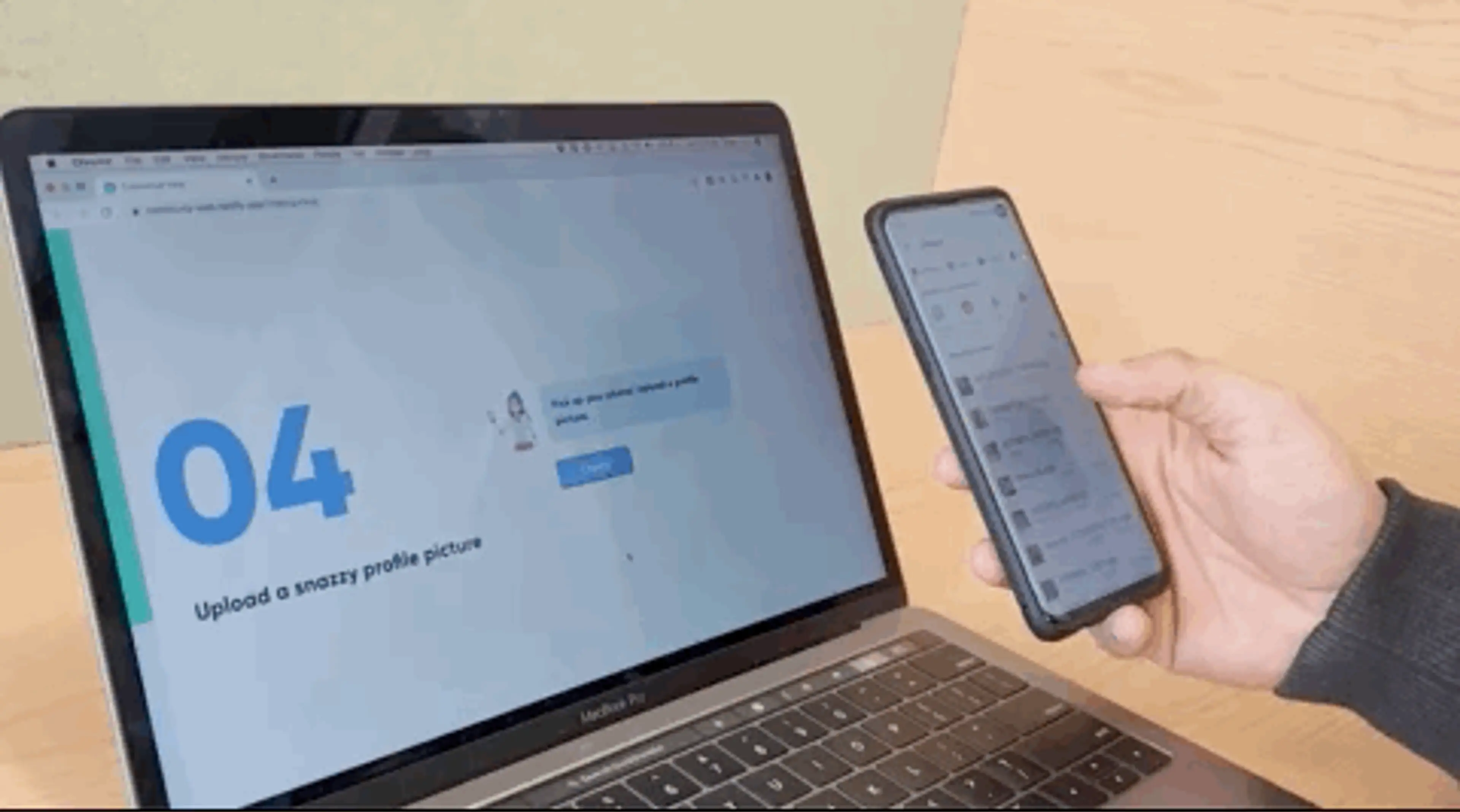

Once the devices were connected, our prototype stepped users through a sign-up form that asked for a wide range of inputs from the mobile phone.
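Each of those inputs travels from the phone to the desktop over a WebRTC data channel, and a camera photo is far larger than what is safe to send as one data channel message; around 16 KiB is a commonly recommended ceiling for messages that must work across browsers. A minimal sketch of splitting a file into numbered chunks on the phone and reassembling it on the desktop; the chunk format is our own assumption, and serialising each chunk onto the channel is left out.

```typescript
// Commonly recommended maximum size for a single cross-browser data channel message.
const CHUNK_SIZE = 16 * 1024;

// Hypothetical chunk format: which upload, which piece, how many pieces, and the bytes.
interface Chunk {
  uploadId: string;
  index: number;
  total: number;
  bytes: Uint8Array;
}

// Phone side: split a file into chunks no larger than `size` bytes.
function toChunks(uploadId: string, data: Uint8Array, size: number = CHUNK_SIZE): Chunk[] {
  const total = Math.max(1, Math.ceil(data.length / size));
  const chunks: Chunk[] = [];
  for (let index = 0; index < total; index++) {
    chunks.push({ uploadId, index, total, bytes: data.subarray(index * size, (index + 1) * size) });
  }
  return chunks;
}

// Desktop side: collect chunks in any order and rebuild each file once complete.
class Reassembler {
  private pending = new Map<string, { total: number; parts: Map<number, Uint8Array> }>();

  // Returns the finished file when its last missing chunk arrives, otherwise null.
  add(chunk: Chunk): Uint8Array | null {
    let entry = this.pending.get(chunk.uploadId);
    if (!entry) {
      entry = { total: chunk.total, parts: new Map<number, Uint8Array>() };
      this.pending.set(chunk.uploadId, entry);
    }
    if (chunk.index < 0 || chunk.index >= entry.total) return null; // ignore malformed chunks
    entry.parts.set(chunk.index, chunk.bytes);
    if (entry.parts.size < entry.total) return null;

    // Every piece has arrived: copy them into one buffer in index order.
    let length = 0;
    entry.parts.forEach((bytes) => {
      length += bytes.length;
    });
    const file = new Uint8Array(length);
    let offset = 0;
    for (let i = 0; i < entry.total; i++) {
      const part = entry.parts.get(i) as Uint8Array;
      file.set(part, offset);
      offset += part.length;
    }
    this.pending.delete(chunk.uploadId);
    return file;
  }
}
```

In the browser, a captured `Blob` can be turned into bytes with `new Uint8Array(await blob.arrayBuffer())` before chunking.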

The obvious first step was to collect the user’s details. The phone switches to the camera view and prompts the user to take a photo of their driver’s licence. The photo is sent back to the desktop browser, where OCR extracts the details and auto-completes the form. The user can then verify or change the details if needed.
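Once OCR has read the licence, the remaining work is mapping recognised fields onto the form while leaving the user in control. A minimal sketch, assuming a hypothetical OCR service that returns named fields with a confidence between 0 and 1: values below a chosen threshold are prefilled but flagged for review, and anything the user has already typed is never overwritten.

```typescript
// Hypothetical OCR result: a recognised field, its text, and a 0..1 confidence.
interface OcrField {
  name: string;
  value: string;
  confidence: number;
}

// State of one form field on the desktop.
interface FormField {
  value: string;
  source: "empty" | "user" | "ocr";
  needsReview: boolean;
}

type Form = Record<string, FormField>;

// Merge OCR results into the form without clobbering anything the user typed.
function prefillFromOcr(form: Form, ocr: OcrField[], reviewBelow: number = 0.9): Form {
  const next: Form = { ...form };
  for (const field of ocr) {
    const current = next[field.name];
    // Ignore fields the form doesn't have, and never overwrite user input.
    if (!current || current.source === "user") continue;
    next[field.name] = {
      value: field.value.trim(),
      source: "ocr",
      needsReview: field.confidence < reviewBelow, // flag shaky reads for the user to check
    };
  }
  return next;
}
```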

Once that worked, we realised we could use the phone for much more, including liveness detection (are you a human, a bot or an imposter?), location verification, adding photos, adding a contact, and even using the touch screen to add a signature at the end of the process. We created a giant list of possibilities and set out to see how many we could prove in our prototype.
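Each of those capabilities corresponds to a standard browser API (`getUserMedia` for the camera and microphone, the Geolocation API, `devicemotion` events, the Contact Picker API, and pointer events for drawing), and support varies between phones and browsers. A minimal sketch of feature-detecting them before offering a task; it takes the browser globals as a parameter so it can run outside a browser, and the capability names are our own.

```typescript
// Minimal structural view of the browser globals we inspect (in a browser, pass globalThis).
interface BrowserGlobals {
  navigator?: {
    mediaDevices?: { getUserMedia?: unknown };
    geolocation?: unknown;
    contacts?: unknown;
  };
  DeviceMotionEvent?: unknown;
  PointerEvent?: unknown;
}

type Capability = "camera" | "microphone" | "location" | "motion" | "contacts" | "touchDrawing";

// Report which capabilities this browser appears to support.
function detectCapabilities(g: BrowserGlobals): Capability[] {
  const found: Capability[] = [];
  const nav = g.navigator;
  if (typeof nav?.mediaDevices?.getUserMedia === "function") {
    found.push("camera", "microphone"); // getUserMedia covers both
  }
  if (nav?.geolocation) found.push("location");
  if (g.DeviceMotionEvent) found.push("motion");
  if (nav?.contacts) found.push("contacts"); // Contact Picker API
  if (g.PointerEvent) found.push("touchDrawing");
  return found;
}
```

Detection only shows that an API exists: the user still has to grant permission for the camera, microphone or location when each task starts, and the Contact Picker API in particular is only available in some mobile browsers.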

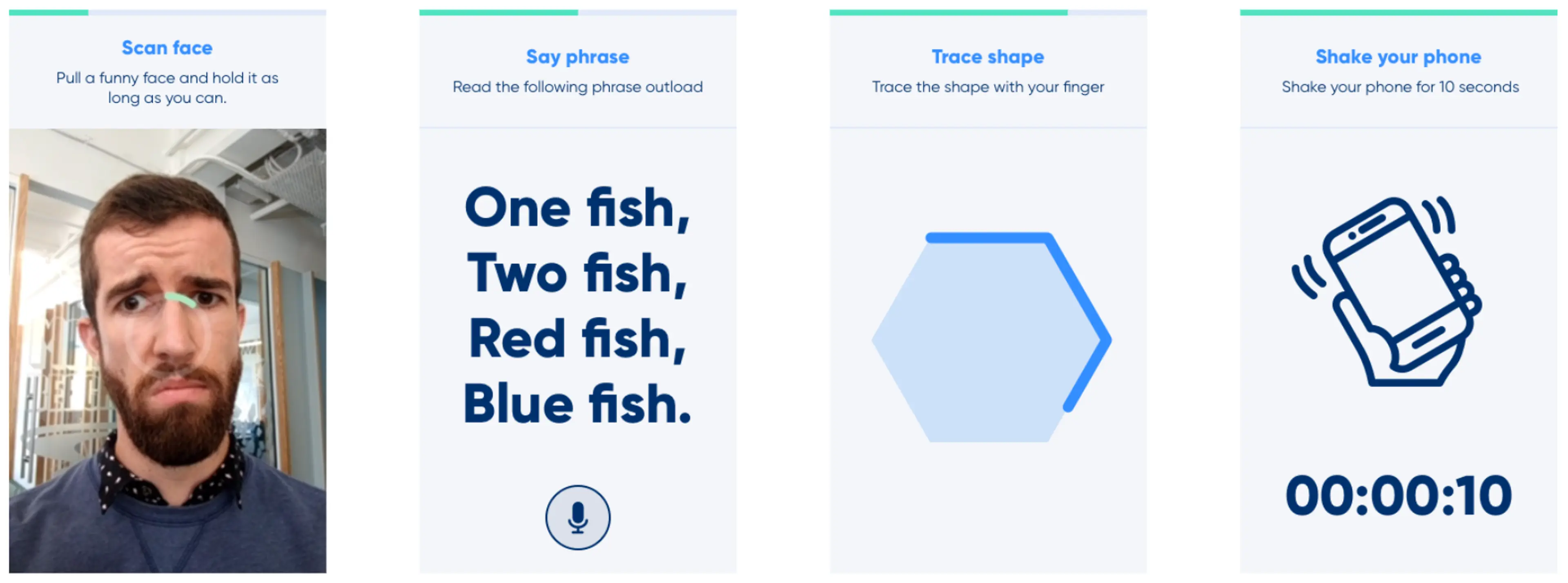

Are you human? And alive?!

Step 03 is the liveness test. AML and government identity verification best practice these days often requires users to prove that they are alive (and not a bot, or someone uploading another person’s passport or licence photo). We send the desktop prototype back into listening mode and fire a series of quick challenges at the user to prove there is a real human being there.
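Making the challenges unpredictable is what stops someone replaying a pre-recorded video or a photo. A minimal sketch of generating a random challenge series; the challenge types mirror the examples in this post, the word, expression and shape lists are hypothetical, and the random function is passed in so the sequence can be tested.

```typescript
// Challenge types modelled on the examples in this post.
type Challenge =
  | { kind: "expression"; expression: string }
  | { kind: "sayPhrase"; phrase: string }
  | { kind: "traceShape"; shape: string }
  | { kind: "shake"; seconds: number };

// Hypothetical pools to draw from; a real system would use much larger ones.
const EXPRESSIONS = ["smile", "raise your eyebrows", "open your mouth"];
const WORDS = ["kiwi", "harbour", "quiz", "pavlova", "jandal", "tui"];
const SHAPES = ["triangle", "star", "zigzag", "spiral"];

// Pick one item using a random function that returns values in [0, 1).
function pick<T>(items: T[], random: () => number): T {
  return items[Math.floor(random() * items.length)];
}

// Build an unpredictable series of challenges, finishing with the shake test.
// Math.random is fine for a demo; production code should use crypto.getRandomValues.
function buildChallenges(random: () => number = Math.random): Challenge[] {
  const phrase = [pick(WORDS, random), pick(WORDS, random), pick(WORDS, random)].join(" ");
  const challenges: Challenge[] = [
    { kind: "expression", expression: pick(EXPRESSIONS, random) },
    { kind: "sayPhrase", phrase },
    { kind: "traceShape", shape: pick(SHAPES, random) },
  ];
  // Fisher-Yates shuffle so the order changes every time.
  for (let i = challenges.length - 1; i > 0; i--) {
    const j = Math.floor(random() * (i + 1));
    const tmp = challenges[i];
    challenges[i] = challenges[j];
    challenges[j] = tmp;
  }
  challenges.push({ kind: "shake", seconds: 10 });
  return challenges;
}
```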

Here are some of the ways you can prove liveness: a facial recognition scan that detects you making expressions, repeating a random phrase into the phone, tracing a random shape, and finally, shaking the phone for 10 seconds. We thought that was enough…
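Detecting the shake uses the phone’s accelerometer, which the browser exposes through `devicemotion` events (on iOS the page must first ask permission with `DeviceMotionEvent.requestPermission()`). A minimal sketch of one possible check, assuming readings of `event.acceleration` have been collected into an array: split the 10 seconds into one-second windows and require several strong movements in every window, so a single jolt doesn’t pass. The thresholds are hypothetical and would need tuning on real devices.

```typescript
// One accelerometer reading, e.g. from DeviceMotionEvent.acceleration (m/s², gravity excluded).
interface MotionSample {
  t: number; // milliseconds
  x: number;
  y: number;
  z: number;
}

// Hypothetical thresholds; real values need tuning on actual phones.
const SHAKE_ACCEL = 12; // m/s² counted as a vigorous movement
const MIN_PEAKS_PER_SECOND = 2;

// True if the phone was shaken in every one-second window for `seconds` seconds.
// Samples are assumed to be in time order.
function isSustainedShake(samples: MotionSample[], seconds: number = 10): boolean {
  if (samples.length === 0) return false;
  const start = samples[0].t;
  const peaks = new Array<number>(seconds).fill(0);
  for (const s of samples) {
    const second = Math.floor((s.t - start) / 1000);
    if (second >= seconds) break;
    const magnitude = Math.sqrt(s.x * s.x + s.y * s.y + s.z * s.z);
    if (magnitude >= SHAKE_ACCEL) peaks[second]++;
  }
  return peaks.every((count) => count >= MIN_PEAKS_PER_SECOND);
}
```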

Identity verification usually requires proof of residence or location. For New Zealand government services like RealMe, or for banks, you have to provide a recent utility bill (less than 3 months old) as proof of address. If the bill is scanned and uploaded electronically, many organisations also require the location coordinates of the uploading device (i.e. to show the upload was done at home).
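The ‘less than 3 months old’ rule is easy to get subtly wrong with calendar arithmetic: subtracting three months from 31 May naively lands on a nonexistent 31 February, which JavaScript’s `Date` silently rolls over into March. A minimal sketch of a recency check that clamps to the end of the month; reading the issue date off the bill (for example with OCR) is assumed to have happened already.

```typescript
// Days in a given month (month is 0-based, as in Date).
function daysInMonth(year: number, month: number): number {
  return new Date(Date.UTC(year, month + 1, 0)).getUTCDate();
}

// Midnight UTC `months` calendar months before `date`, clamping the day
// (31 May minus 3 months gives 28 or 29 February, not early March).
function monthsBefore(date: Date, months: number): Date {
  const totalMonths = date.getUTCFullYear() * 12 + date.getUTCMonth() - months;
  const year = Math.floor(totalMonths / 12);
  const month = totalMonths - year * 12;
  const day = Math.min(date.getUTCDate(), daysInMonth(year, month));
  return new Date(Date.UTC(year, month, day));
}

// A bill is recent if it is less than `months` months old and not dated in the future.
function isRecentBill(issued: Date, today: Date, months: number = 3): boolean {
  const cutoff = monthsBefore(today, months).getTime();
  return issued.getTime() > cutoff && issued.getTime() <= today.getTime();
}
```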

We thought we could make this easier for the user by scanning a bill, grabbing the address using OCR, and automatically matching this against the user’s location.
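Matching the bill against the user’s location comes down to comparing two coordinates: the device position from the browser’s Geolocation API (`navigator.geolocation.getCurrentPosition`, which also reports an `accuracy` radius in metres) and the bill address turned into coordinates by a geocoding service, which we assume here rather than name. A minimal sketch of the comparison using the haversine great-circle distance; the 200 m tolerance is a hypothetical value.

```typescript
interface LatLng {
  lat: number; // degrees
  lng: number; // degrees
}

const EARTH_RADIUS_M = 6371000; // mean Earth radius in metres

// Great-circle distance between two points using the haversine formula.
function haversineMetres(a: LatLng, b: LatLng): number {
  const toRad = (deg: number) => (deg * Math.PI) / 180;
  const dLat = toRad(b.lat - a.lat);
  const dLng = toRad(b.lng - a.lng);
  const h =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(toRad(a.lat)) * Math.cos(toRad(b.lat)) * Math.sin(dLng / 2) ** 2;
  // Clamp guards against rounding pushing the value just above 1.
  return 2 * EARTH_RADIUS_M * Math.asin(Math.min(1, Math.sqrt(h)));
}

// Is the device close enough to the geocoded bill address?
// The reading's own accuracy radius is added so a fuzzy GPS fix isn't rejected unfairly.
function locationMatchesAddress(
  device: LatLng,
  deviceAccuracyM: number,
  address: LatLng,
  toleranceM: number = 200,
): boolean {
  return haversineMetres(device, address) <= toleranceM + deviceAccuracyM;
}
```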

And… add a signature. Because why not.
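On the phone, a signature pad can record the finger’s path from `pointerdown`, `pointermove` and `pointerup` events as a list of strokes. A minimal sketch of turning those strokes into an SVG path the desktop can display or store; the stroke format and the rounding to one decimal place are our own assumptions.

```typescript
// A point captured from pointer events on the phone's drawing pad.
interface Point {
  x: number;
  y: number;
}

// A stroke is everything drawn between finger-down and finger-up.
type Stroke = Point[];

// Round to one decimal place to keep the path string compact.
function round(n: number): number {
  return Math.round(n * 10) / 10;
}

// "M" moves to the start of each stroke, "L" draws a line to each following point.
function strokesToSvgPath(strokes: Stroke[]): string {
  return strokes
    .filter((stroke) => stroke.length > 0)
    .map((stroke) =>
      stroke.map((p, i) => `${i === 0 ? "M" : "L"} ${round(p.x)} ${round(p.y)}`).join(" "),
    )
    .join(" ");
}

// Wrap the path in a standalone SVG document that can be sent to the desktop.
function signatureSvg(strokes: Stroke[], width: number, height: number): string {
  return (
    `<svg xmlns="http://www.w3.org/2000/svg" width="${width}" height="${height}">` +
    `<path d="${strokesToSvgPath(strokes)}" fill="none" stroke="black" stroke-width="2"/></svg>`
  );
}
```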

Security

The underlying technology used for our prototype is WebRTC, which encrypts everything it transmits (data channels use DTLS, and audio and video use SRTP). Our resident Security SWAT team took a deeper look and, although the basic setup covers most cases, we believe further security considerations would need to be worked through with the full solution architecture in mind.
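Encryption protects data in transit, but it doesn’t decide who is allowed to connect. As one example of the kind of extra consideration a full solution might need (an assumption, not something described for the prototype), the signalling side could make each QR session ID single-use and short-lived, so a photographed or screenshotted code can’t be used to join later. A minimal in-memory sketch with a hypothetical `PairingSessions` store:

```typescript
// Hypothetical server-side store of pairing sessions created by desktops.
class PairingSessions {
  private expiries = new Map<string, number>(); // sessionId -> expiry time (ms)
  private ttlMs: number;

  constructor(ttlMs: number = 2 * 60 * 1000) {
    this.ttlMs = ttlMs;
  }

  // Called when the desktop shows a new QR code.
  create(sessionId: string, now: number): void {
    this.expiries.set(sessionId, now + this.ttlMs);
  }

  // Called when a phone tries to join: succeeds at most once, and only before expiry.
  claim(sessionId: string, now: number): boolean {
    const expiry = this.expiries.get(sessionId);
    this.expiries.delete(sessionId); // any attempt burns the ID so it can't be reused
    return expiry !== undefined && now <= expiry;
  }
}
```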

Conclusions and musings

This experience is seriously intuitive and could actually transform onboarding workflows. We have also identified a range of other use cases for it including in a service or contact centre environment for remote support where additional information is needed. As this process only uses the phone browser, users can provide information securely without having to install an app on their phones.

The technology used here is open source, and our team came up with approaches to plug it into other underlying systems, such as CRMs or machine learning platforms, that could provide extra capability.