Heads up: our Ideas Factory has been refreshed, levelled up, and grown up into Alphero Intelligence. Some of our old posts are pretty cool tho'. Check this one out.

- MediaPipe Hands is a tool from Google that uses AI to recognise and track hand movements from video input in real time.

- This opens up numerous possibilities for interacting with your device without physically touching it.

- We think this could become extra handy (sorry) in many workplaces and even help address some accessibility challenges. So we had a play around with it to see exactly what it can do.

Like everyone else, we’ve been doing a lot of deep diving into ChatGPT of late. But it’s not the only interesting AI/ML platform-as-a-service tool out there right now. There is a plethora of new ones popping up all the time, and we’ve been tinkering with them to see what they can do. Here’s a look at the potential of MediaPipe Hands...

There are many scenarios where we need to interact with technology but the physical act of doing so is at best inconvenient - interrupting our process or breaking our flow - and at worst difficult or even impossible for people with certain disabilities.

In a workplace context, think of jobs that aren’t typically desk-bound but still require you to fill in forms, make notes, or work through checklists as you go. You could be a doctor or nurse tending to a patient, a mechanic inspecting a car, or even a presenter who wants to move through their slides while roaming around the room.

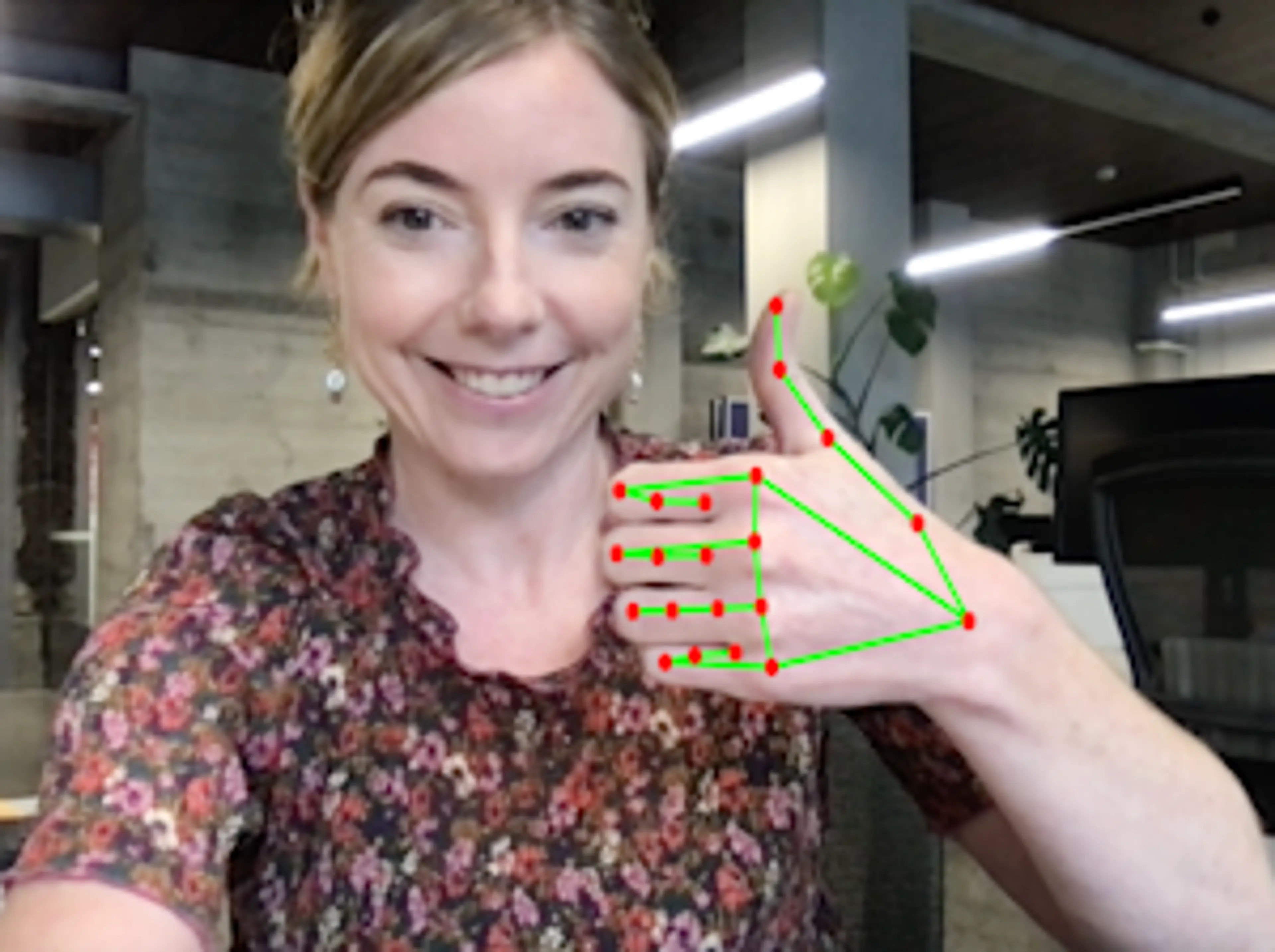

MediaPipe Hands provides high-fidelity hand and finger tracking via machine learning, and can infer 21 3D landmarks (key points) on a hand from just a single frame. It also has a Gesture Recognizer that runs on top of the predicted hand skeleton and applies a simple algorithm to pick up common hand gestures. Each gesture can then be mapped to a task on your device that it intuitively corresponds to. For example, giving a thumbs up to your device’s camera could tick a checkbox on the screen, without you having to touch a keyboard or mouse. Interested? Here’s the dev team giving it a whirl...
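To make the thumbs-up-to-checkbox idea a little more concrete, here is a minimal Python sketch of how a gesture could be derived from the 21 hand landmarks and turned into a checklist tick. It assumes the landmark numbering MediaPipe Hands uses (0 is the wrist, 2 to 4 run up the thumb to its tip, 8, 12, 16 and 20 are the other fingertips) and x/y coordinates normalised to the image, with y growing downwards. The `is_thumbs_up` rule, the `Checklist` class, its `hold_frames` setting and the `synthetic_hand` coordinates are all hypothetical illustrations, not the algorithm MediaPipe's Gesture Recognizer actually uses.

```python
# Hypothetical sketch: a hand-written thumbs-up rule over 21 hand landmarks,
# not the algorithm MediaPipe's Gesture Recognizer actually uses.
import math
from dataclasses import dataclass, field
from typing import List, Sequence

# Landmark indices in the MediaPipe Hands numbering (0 = wrist, 4 = thumb tip).
WRIST = 0
THUMB_MCP, THUMB_IP, THUMB_TIP = 2, 3, 4
FINGER_PIPS = (6, 10, 14, 18)  # index, middle, ring and pinky middle joints
FINGER_TIPS = (8, 12, 16, 20)  # index, middle, ring and pinky fingertips


@dataclass
class Landmark:
    x: float  # normalised image coordinate, 0.0 = left edge
    y: float  # normalised image coordinate, 0.0 = top edge (y grows downwards)


def is_thumbs_up(hand: Sequence[Landmark]) -> bool:
    """Thumb pointing up while the other four fingers are curled."""
    if len(hand) != 21:
        raise ValueError("expected 21 hand landmarks")
    wrist = (hand[WRIST].x, hand[WRIST].y)

    def dist_to_wrist(i: int) -> float:
        return math.dist((hand[i].x, hand[i].y), wrist)

    # Smaller y means higher in the frame: tip above IP joint above MCP joint.
    thumb_up = hand[THUMB_TIP].y < hand[THUMB_IP].y < hand[THUMB_MCP].y
    # A curled finger's tip sits closer to the wrist than its middle joint.
    fingers_curled = all(
        dist_to_wrist(tip) < dist_to_wrist(pip)
        for tip, pip in zip(FINGER_TIPS, FINGER_PIPS)
    )
    return thumb_up and fingers_curled


@dataclass
class Checklist:
    items: List[str]
    ticked: List[bool] = field(init=False)
    hold_frames: int = 5  # consecutive frames the gesture must be held
    _streak: int = field(default=0, init=False)

    def __post_init__(self) -> None:
        self.ticked = [False] * len(self.items)

    def on_frame(self, hand: Sequence[Landmark]) -> None:
        """Tick the next unticked item once per sustained thumbs up."""
        self._streak = self._streak + 1 if is_thumbs_up(hand) else 0
        if self._streak == self.hold_frames and False in self.ticked:
            self.ticked[self.ticked.index(False)] = True


def synthetic_hand(thumb_up: bool) -> List[Landmark]:
    """Made-up coordinates for illustration, not real MediaPipe output."""
    hand = [Landmark(0.5, 0.8) for _ in range(21)]  # unused joints sit on the wrist
    thumb_ys = (0.6, 0.5, 0.4) if thumb_up else (0.6, 0.7, 0.8)
    for i, y in zip((THUMB_MCP, THUMB_IP, THUMB_TIP), thumb_ys):
        hand[i] = Landmark(0.45, y)
    for pip, tip in zip(FINGER_PIPS, FINGER_TIPS):
        hand[pip] = Landmark(0.65, 0.6)  # middle joints further from the wrist
        hand[tip] = Landmark(0.55, 0.7)  # fingertips tucked back closer to the wrist
    return hand


if __name__ == "__main__":
    checklist = Checklist(["Check brakes", "Check tyres"])
    for _ in range(5):
        checklist.on_frame(synthetic_hand(thumb_up=True))
    print(checklist.ticked)  # [True, False]
```

Requiring the gesture to be held for a few consecutive frames, and ticking only once per hold, is one way to stop a single thumbs up from ticking every box on the list. In a real app the landmarks would come from MediaPipe's hand-tracking output for each video frame rather than from `synthetic_hand`.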

As you can see, it opens up exciting possibilities for performing digital tasks using gestures alone. Not only could this improve everyday ways of working, it could help with accessibility too - from supporting people who find fine motor tasks difficult to translating sign language into speech or text.

Best of all, it’s developer-friendly, quick to set up, extremely fast and efficient, and, most importantly, very accurate for a free product. So far, we give it a double thumbs up.