Heads up: our Ideas Factory has been refreshed, levelled up, and grown up into Alphero Intelligence. Some of our old posts are pretty cool tho'. Check this one out.

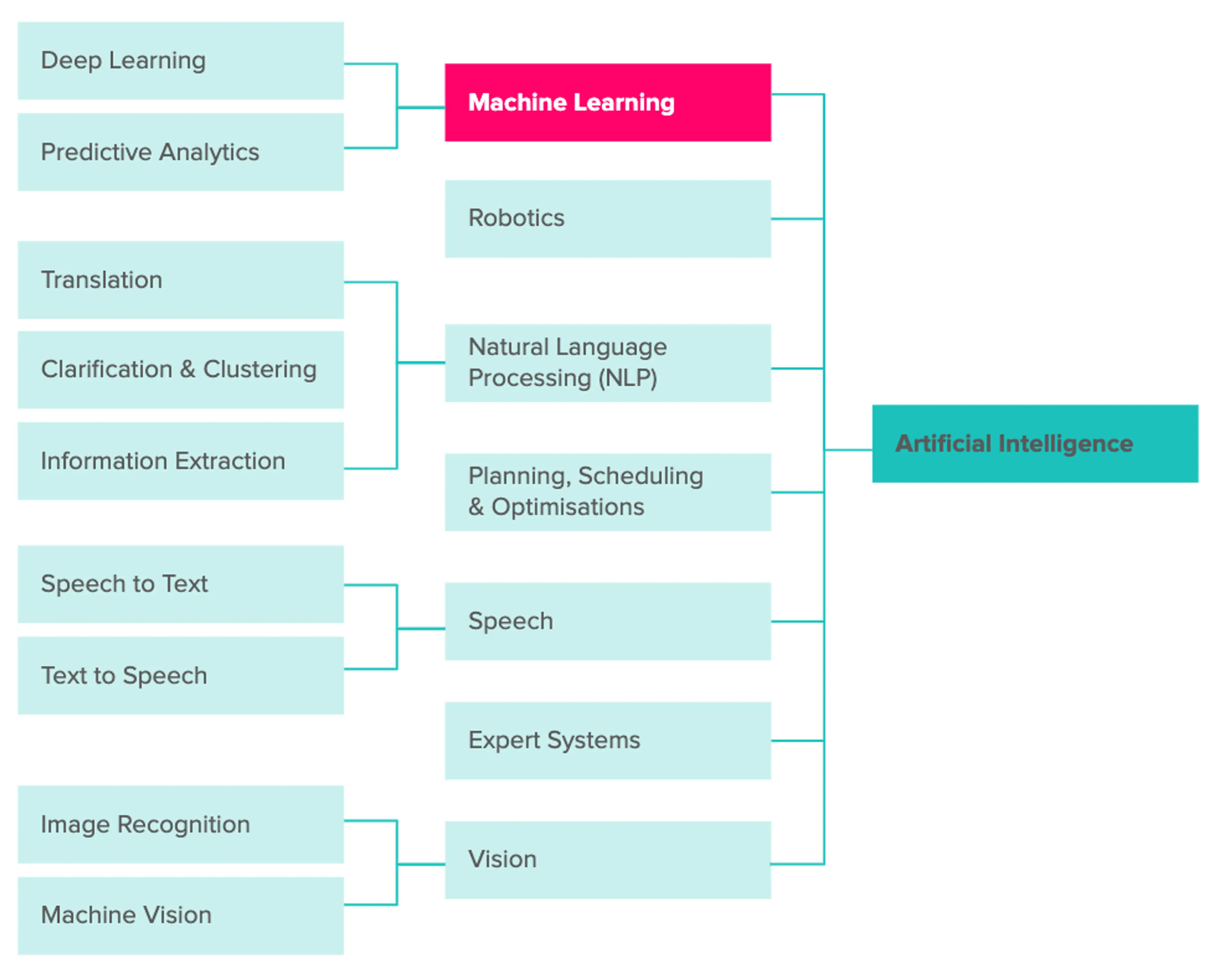

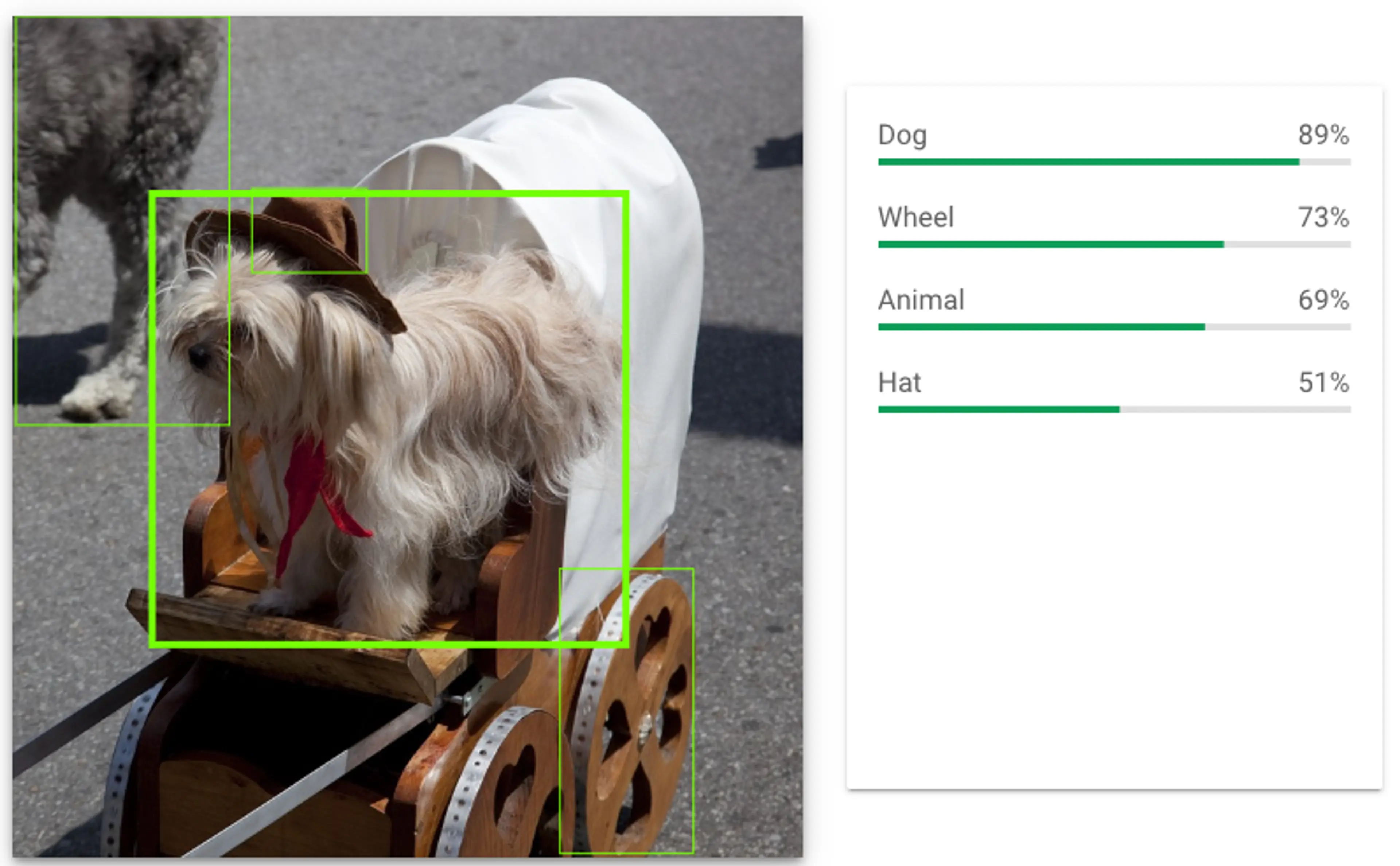

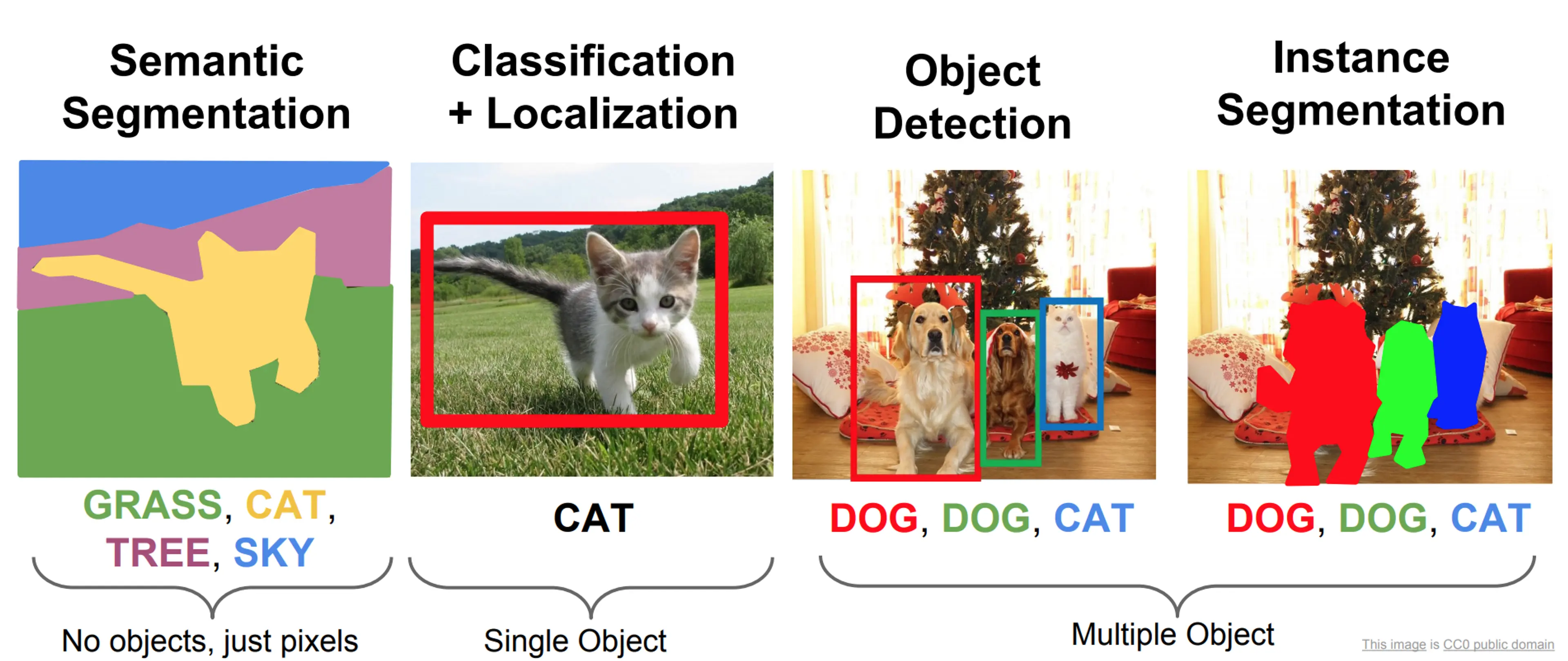

- Artificial intelligence and machine learning work on confidence ratings, not absolutes.

- It’s not as simple as asking, “Is this a dog, yes or no?” Sometimes you discover that your dog is 30.1% likely to be a bird.

- Successful computer vision services tend to focus on a single task, and they require a LOT of training.

Tech companies have gotten pretty good at image recognition. Search “dog” in Google Photos, and it will almost instantly return, with impressive accuracy, just about every photo you’ve uploaded that has a dog in it. Plus the occasional bonus cat, if you’re lucky. (It’s not what I asked for, but I’m not going to complain.)

So how do computers do this?

They have to learn what a dog is from scratch. This is achieved through machine learning (ML) — a core component of artificial intelligence (AI).

For a machine to learn what a dog is, we humans first have to provide the training material. We at Alphero like to believe that, between us, we have a lot of dog photos. But we don’t have nearly enough to give a machine a solid reference for what a dog is. For that, we had to outsource the task to Google.

Google Cloud’s Vision API comes straight off the shelf pre-trained with more dog photos than you can shake a stick at.

Because Google’s deep learning models power the service, we had instant access to the thousands of classes that the cloud-based model was already trained to detect.

More person than dog. Yep. Sure. Source: Pixabay

Who's a good cowboy? Source: pxhere

Erm... I guess? Source: pxhere

Google Cloud Vision’s object detection is impressively good at identifying dogs (for the most part). In the last image, though, it isn’t quite confident enough to declare that there’s definitely a dog. But if we look at how Google is labelling the image, rather than how it’s objectifying it (i.e. which objects it detects), we can see that Google is still about 65% confident that there’s a pug in there somewhere.

Labelled as “somewhat puggy”. Source: pxhere

What is the computer actually seeing?

Objects? Labels? What does it all mean?

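Object detection is often lumped in with image classification, but it goes a step further: as well as surmising what the photo is of, it pinpoints where each object is and draws a bounding box around it. e.g. “This photo is of a dog wearing a hat, and here’s where the dog is.”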

Labelling, on the other hand, is like a tagging system that can include any kind of conceptual descriptor or general attribute. e.g. “This photo has some grass in it, so there could possibly be a tree or two, likely some plant life… Hey, could this be a fawn? Fawns have fur, and hang out in nature. Because of adaptation!” Labels are far more granular, as they’re based on inference.
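To make the objects-versus-labels distinction concrete, here’s a minimal Python sketch of the two kinds of result and how a confidence threshold treats them. The `DetectedObject` and `Label` types, the `confident` helper, the 0.5 threshold and every score except the 65% pug are hypothetical illustrations, not the Vision API’s actual client classes or output.

```python
from dataclasses import dataclass


@dataclass
class DetectedObject:
    """Hypothetical object-detection result: what it is, and where."""
    name: str
    score: float  # confidence between 0 and 1
    box: tuple  # (x_min, y_min, x_max, y_max), as fractions of the image size


@dataclass
class Label:
    """Hypothetical label result: a descriptive tag with no location."""
    description: str
    score: float  # confidence between 0 and 1


def confident(results, threshold=0.5):
    """Keep only results whose confidence meets the threshold."""
    return [r for r in results if r.score >= threshold]


# Illustrative values only; the 0.65 "Pug" echoes the example above.
objects = [DetectedObject("Dog", 0.42, (0.10, 0.20, 0.80, 0.90))]
labels = [Label("Fur", 0.81), Label("Pug", 0.65), Label("Hat", 0.55)]

print([o.name for o in confident(objects)])        # no dog box clears 0.5
print([l.description for l in confident(labels)])  # but the labels do
```

The same threshold can hide a dog from the object list while the label list still says “pug”, which is exactly the situation in the last image.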

This is all part of what we call computer vision, which is a whole thing. OCR (optical character recognition) is another classic example: it extracts text from images, and is best known for scanning credit cards and for real-time translation through your phone camera. Just point your phone at the word “dog” and see what Google Translate spits out.

OCR in action

BUT the difficulty with Google Cloud Vision is that it’s way too overpowered for what we need. Image labelling is cool ’n’ all, but it’s far better suited to image analysis than object detection. We’re not really looking to explore the general qualities of a particular dog (whether it’s good, bad, sitting or staying); we just want the computer to recognise one when it sees one.

Playing with YOLO and COCO

After pulling all our toys out of the box to see what we had to play with, we settled on YOLO (You Only Look Once) as our real-time object detection system, pre-trained on the COCO (Common Objects in Context) dataset.

The mobile-friendly Tiny YOLO VOC model is lightweight, which means lower latency for real-time processing, making it a great general-purpose choice. YOLO splits the image into a square grid, and each grid cell predicts bounding boxes and confidence scores for the objects centred in it, all in a single pass through the network (hence “You Only Look Once”). Compared to other frameworks, YOLO was incredibly fast, hitting 200 FPS (frames per second) on a computer’s GPU. Unfortunately, on a phone CPU it crawled along at a painfully slow 6 FPS… All the major frameworks have released mobile-friendly versions, but that still doesn’t guarantee a smooth real-time experience.
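Two parts of the paragraph above can be sketched in a few lines of Python, assuming a 416 × 416 input split into a 13 × 13 grid (a common setup for YOLOv2-era models such as Tiny YOLO; other versions use different grids). `responsible_cell` finds which grid cell an object’s centre falls in, since that cell is the one that predicts the object, and `frame_time_ms` turns a frame rate into a per-frame time budget. Both are hypothetical helpers, not part of any YOLO library.

```python
def responsible_cell(cx, cy, width, height, s):
    """Return (row, col) of the S x S grid cell containing a box centre.

    In YOLO-style detectors, the cell containing an object's centre is the
    one responsible for predicting that object's box and class scores.
    """
    col = min(int(cx / width * s), s - 1)   # clamp centres on the right edge
    row = min(int(cy / height * s), s - 1)  # clamp centres on the bottom edge
    return row, col


def frame_time_ms(fps):
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


# A dog centred in a 416 x 416 frame, split into a 13 x 13 grid.
print(responsible_cell(208, 208, 416, 416, 13))
print(frame_time_ms(200))           # budget per frame on the GPU
print(round(frame_time_ms(6), 1))   # budget per frame on the phone CPU
```

At 6 FPS each frame takes roughly 167 ms versus 5 ms at 200 FPS, which is why the phone experience feels so sluggish.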

In contrast to Google’s beast of a dataset, COCO has far fewer classes (80 object categories), but it has all the essentials. If all you want your computer to recognise is your dog (or any other “basic object”), COCO can get you there.

Bring out the test subjects!

So which of Alphero’s office dogs is the doggiest?

Not too shabby… We also discovered that there’s a 30.1% chance that Rocco might be a bird! We’ve always had our suspicions.
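Confidence ratings like Rocco’s 30.1% bird score typically come from turning a model’s raw class scores into probabilities. Here’s a minimal Python sketch, assuming a softmax over made-up raw scores (they don’t reproduce Rocco’s numbers, and this isn’t necessarily how our model computed them). The `softmax` and `verdict` helpers and the 0.5 threshold are hypothetical: the top class only “wins” if it clears the threshold, and the runner-up never drops to zero.

```python
import math


def softmax(scores):
    """Turn raw class scores (logits) into confidences that sum to 1."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {name: math.exp(s - peak) for name, s in scores.items()}
    total = sum(exps.values())
    return {name: e / total for name, e in exps.items()}


def verdict(confidences, threshold=0.5):
    """Return the top class if it clears the threshold, otherwise None."""
    best = max(confidences, key=confidences.get)
    return best if confidences[best] >= threshold else None


# Hypothetical raw scores for one photo; not real model output.
conf = softmax({"dog": 2.0, "bird": 1.3, "cat": -0.5})
for name, p in sorted(conf.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {p:.1%}")
print(verdict(conf))
```

Raising the threshold trades false alarms for missed dogs; there’s no setting that turns the answer into a clean yes or no.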

Where are we going with this?

In the coming years, we expect a shift away from simple “object detection” towards “instance segmentation”. That’s when things will get really exciting. Basically, we’ll move away from drawing bounding boxes around objects and start colouring within the lines instead.

“Boxes are stupid anyway though, I’m probably a true believer in masks except I can’t get YOLO to learn them.” Joseph Redmon, YOLOv3. Source: CC0 public domain

Segmenting instances of dogs on COCO. Source: CC0 public domain

This gives us far more granular insight into which objects are in the image. Instance segmentation combines semantic segmentation with object detection: it clusters semantically similar pixels and then classifies them, separating each instance of an object instead of lumping them all together.
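As a rough intuition for separating instances, here’s a toy Python sketch. It assumes a semantic segmentation step has already marked every “dog” pixel in a hypothetical mask, then splits that mask into separate dogs using a much simpler, swapped-in technique (connected-component labelling by flood fill), and compares each dog’s bounding-box area with its actual pixel count. Real instance segmentation models predict a mask for each detected object instead, and unlike this toy they can tell apart two dogs that are touching.

```python
from collections import deque


def split_instances(mask):
    """Label 4-connected regions of truthy pixels as separate instances.

    Returns (ids, count): a same-shaped grid with 0 for background and
    1, 2, ... for each separate region, plus the number of regions found.
    """
    h, w = len(mask), len(mask[0])
    ids = [[0] * w for _ in range(h)]
    count = 0
    for y in range(h):
        for x in range(w):
            if mask[y][x] and ids[y][x] == 0:
                count += 1
                ids[y][x] = count
                queue = deque([(y, x)])
                while queue:  # breadth-first flood fill of one region
                    cy, cx = queue.popleft()
                    for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                        if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and ids[ny][nx] == 0:
                            ids[ny][nx] = count
                            queue.append((ny, nx))
    return ids, count


def box_vs_mask(ids, instance):
    """Return (bounding-box area, mask pixel count) for one instance."""
    pts = [(y, x) for y, row in enumerate(ids) for x, v in enumerate(row) if v == instance]
    ys, xs = [p[0] for p in pts], [p[1] for p in pts]
    box_area = (max(ys) - min(ys) + 1) * (max(xs) - min(xs) + 1)
    return box_area, len(pts)


# Hypothetical "dog" pixels from a semantic segmentation step: two dogs.
dog_mask = [
    [1, 1, 0, 0, 0],
    [1, 0, 0, 0, 1],
    [0, 0, 1, 1, 1],
]
ids, count = split_instances(dog_mask)
print(count)
print(box_vs_mask(ids, 1), box_vs_mask(ids, 2))
```

The second dog’s box covers 6 pixels but only 4 of them are dog: that leftover is the “outside the lines” area a mask avoids.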

Technology is cool, but not mature.

Liam De Grey, Developer, Alphero

When it comes to computer vision and machine learning, the result is only as good as what you put in. Some problems can be solved with something called transfer learning (TL), where a model trained on one task applies its knowledge to something similar. For example, what it takes to recognise a cat could also be applied to recognising small dogs. In the real world, we’ve used transfer learning on client projects: re-training an OCR credit card recognition model to scan information from driver licences instead.
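The core idea of transfer learning can be shown in a deliberately tiny, dependency-free Python sketch: keep a “pre-trained” feature extractor frozen and train only a new output layer for the new task. Here `pretrained_features` is a hypothetical hand-written stand-in for a real pre-trained network, and the four training samples are made up. In practice you’d load real pre-trained weights in a framework such as PyTorch or TensorFlow, freeze them, and swap out the final layer.

```python
import math


def pretrained_features(x):
    """Hypothetical frozen 'backbone': its weights are never updated.

    In a real network these would be learned features; here it's a fixed
    function so the example stays self-contained.
    """
    return [x[0] + x[1], x[0] - x[1], 1.0]  # last entry acts as a bias term


def predict(w, x):
    """Probability of the positive class from the new head."""
    z = sum(wi * fi for wi, fi in zip(w, pretrained_features(x)))
    return 1.0 / (1.0 + math.exp(-z))


def train_head(samples, labels, lr=0.5, epochs=200):
    """Fit only a new logistic-regression head on top of frozen features."""
    w = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            f = pretrained_features(x)
            p = predict(w, x)
            # Gradient of the log loss w.r.t. each head weight is (p - y) * f_i.
            w = [wi - lr * (p - y) * fi for wi, fi in zip(w, f)]
    return w


# Made-up data for the "new" task: label 1 when the first input is larger.
samples = [(0.9, 0.1), (0.8, 0.3), (0.2, 0.7), (0.1, 0.9)]
labels = [1, 1, 0, 0]
w = train_head(samples, labels)
print([round(predict(w, x), 2) for x in samples])
```

Only three numbers are learned here, which is the appeal: reusing features means the new task needs far less data than training from scratch.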

It’s still far more practical to start with a pre-trained computer vision model. It takes a shipload of source material to train a computer to recognise a dog from scratch, and for the majority of developers and businesses that just isn’t commercially viable yet. What we do have, though, is an awesome range of computer vision tools to experiment with in the meantime, as we continue to cement our understanding of the underlying concepts and work towards real, reliable commercial applications.

Everyone’s still kind of figuring it out.