Part 1: Introduction (by Kieron, for the non-technical folks among us!)

In a world where all the talk is about large language models, it’s easy to forget about the other AI tools available to us. We are used to sharing images with ChatGPT or Claude and seeing them pull text and context out of those images, but specialised vision models exist that can blow them out of the water on tasks like object detection. Well-trained specialised models are increasingly revolutionising industries from manufacturing to healthcare.

Artiom has been investigating YOLO, an open-source family of machine learning models aimed at object detection. Once trained on a set of labelled example images, a YOLO model can analyse both video and still images in real time, making it easy to create highly accurate AI tools based on your own area of expertise, from identifying plant species to optimising workforces.

An advantage of YOLO is that it delivers real-time performance whilst maintaining the scalability to run on many devices. More critically, leveraging tools like Roboflow has transformed what was once a highly complex domain, previously the preserve of computer vision specialists, into an accessible technology for all developers, project managers, and domain experts across sectors.

This investigation shows that, as technology progresses, breakthroughs often emerge not from the most sophisticated systems, but from those that solve real problems with practical elegance. In healthcare, manufacturing, and beyond, the future might belong to models that can detect, classify, and respond in milliseconds, not after minutes of contemplation.

We’ll let Artiom explain what he found, how he tested it, and why it matters.

Part 2: Technical Deep Dive (by Artiom)

Honestly, when I first switched from Firebase ML Kit’s object detection, I figured it would just be more of the same - maybe a little better, but nothing dramatic. ML Kit’s object detection was fine for the basics, but I always felt like it missed a lot, and I was not happy with the accuracy.

Then I added the YOLO model to my test application to check how it would handle a live video stream from a camera. I used a model trained on the COCO dataset, which covers 80 common object classes, and the accuracy was amazingly good (object detection accuracy was approximately 20% higher than that of the object detection model from ML Kit). And it all happened very fast, in real time.

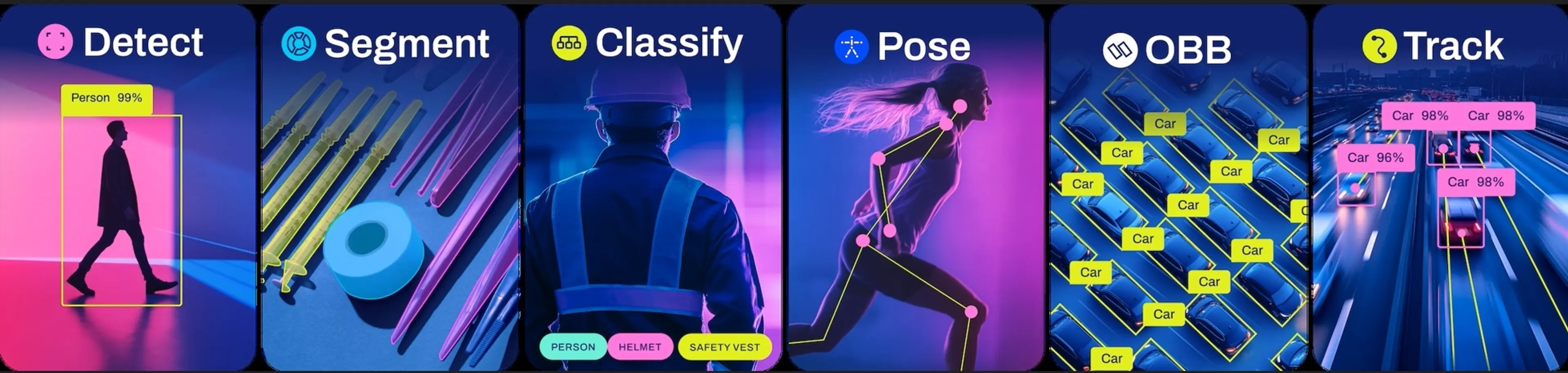

What’s even cooler is that, while I only played with object detection, YOLOv12 actually supports a bunch of other tasks—segmentation, oriented bounding boxes, even pose estimation and classification—so if I ever need to branch out, I know it’s all there. But honestly, just seeing object detection run this smoothly while maintaining a good accuracy was enough to make me rethink what’s possible on mobile.

What YOLO Does: Multi-Task Vision

Most computer vision models tend to do just one thing: maybe they’re good at object detection, or perhaps segmentation, but rarely both. YOLOv12 takes a different approach. It’s built as an all-in-one architecture that covers a bunch of advanced vision tasks, typically through task-specific variants of the same model: object detection, segmentation, classification, and even pose estimation. What’s impressive is that it handles these jobs in real time, and it’s been tuned to work smoothly even on devices with limited power, like an average smartphone.

Beyond the hype: Language models can’t replace vision AI for object detection

While large language models (LLMs) like ChatGPT have shown impressive capabilities in understanding and generating human language, they are not designed for object detection, segmentation, or other image-based computer vision tasks. LLMs are fundamentally trained to process and generate text, not to analyze pixel data or extract visual information from images.

Attempting to use an LLM for tasks like object detection or segmentation can lead to unpredictable and unreliable results, often with very low accuracy, because in most cases these models are not designed to recognize objects within images or to perform fine-grained visual tasks.

In contrast, models like YOLOv12 are purpose-built for computer vision. They can detect, segment, and classify objects in real time, delivering high accuracy and reliable performance even in complex scenes. YOLOv12 and similar models are optimized to process visual information efficiently, making them suitable for deployment on everything from powerful servers to mobile devices.

In summary, while LLMs are valuable for supporting computer vision workflows—such as generating code, explaining concepts, or assisting with data annotation—they are not a replacement for specialized vision models (at least at the moment) when it comes to actual image understanding. For real-time, accurate object detection and segmentation, dedicated vision models like YOLOv12 remain the gold standard.

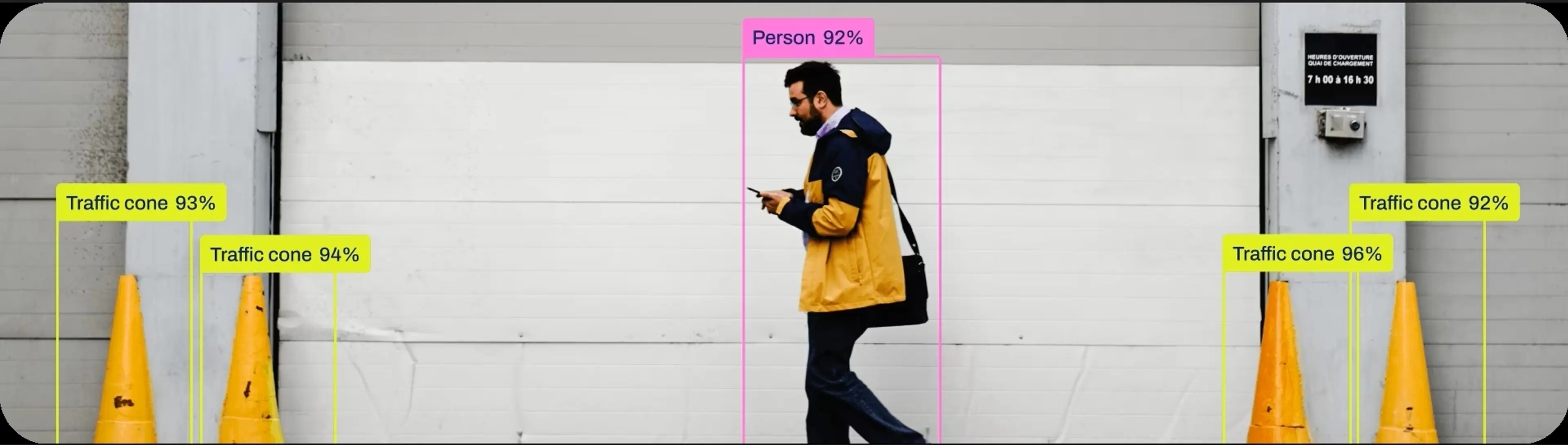

Object Detection

Object detection goes beyond just recognising what’s in a photo—it spots and labels everything in the image, drawing boxes around each object so you know exactly where they are. So instead of just telling you “there’s a dog here,” it will point out the dog, the car, the person, and anything else that shows up, all in one shot.

What sets YOLOv12 apart is the way it processes the whole image at once. It chops the image up into a grid, and then for each piece, it predicts which objects are present, where they are, and how confident it is—all in a single pass through its neural network. That’s why it’s so fast and precise, making it possible to do real-time object detection on live video, whether it’s for robotics, security cameras, or even self-driving cars.

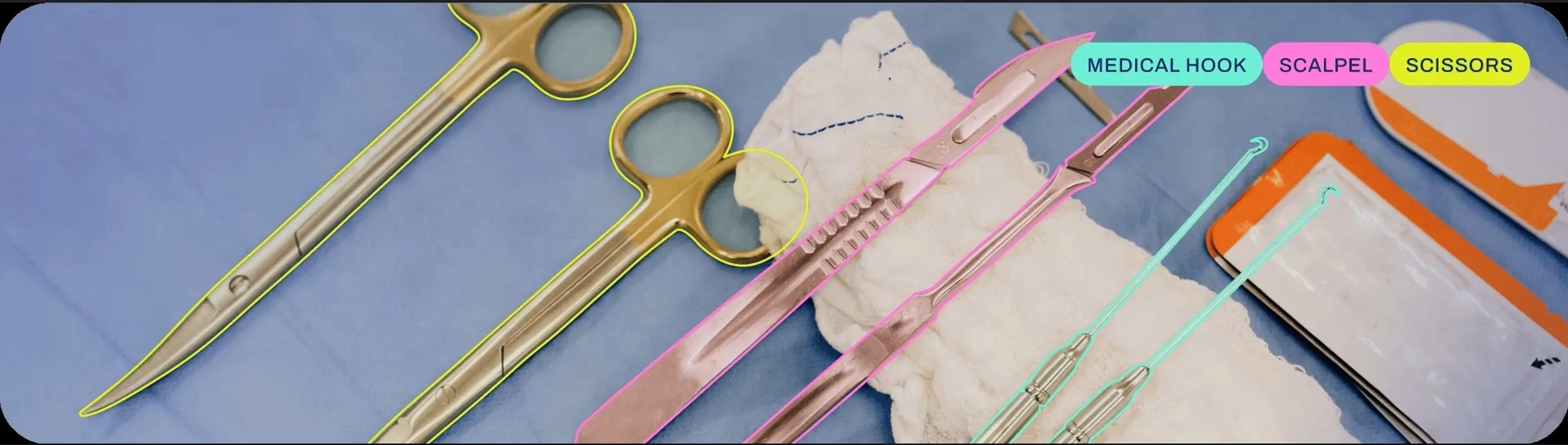
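
To make the grid idea concrete, here is a minimal sketch in plain Python. It is a simplified illustration of the classic YOLO grid concept, not YOLOv12’s actual detection head; the function name `responsible_cell` and the 20x20 grid are assumptions chosen for the example. It works out which grid cell contains the centre of an object, which is the cell that would "own" that prediction.

```python
def responsible_cell(box, image_size, grid_size):
    """Return (row, col) of the grid cell that contains the centre of a box.

    box is (x_min, y_min, x_max, y_max) in pixels; image_size is (width, height).
    """
    x_min, y_min, x_max, y_max = box
    width, height = image_size
    cx = (x_min + x_max) / 2
    cy = (y_min + y_max) / 2
    # Scale the centre into grid units and clamp so edge pixels stay in range.
    col = min(int(cx / width * grid_size), grid_size - 1)
    row = min(int(cy / height * grid_size), grid_size - 1)
    return row, col


# A 640x640 image split into a 20x20 grid: each cell covers 32x32 pixels.
dog_box = (100, 200, 300, 400)  # centre at (200, 300)
print(responsible_cell(dog_box, (640, 640), 20))  # prints (9, 6)
```

Every cell makes its predictions in the same forward pass, which is where the speed comes from.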

Segmentation

If you’ve ever tried to work with images, you know that just drawing boxes around objects is only part of the story. Segmentation steps things up: instead of simple rectangles, it actually traces the exact outline of every object, labelling each pixel so you know precisely what’s what—even when objects overlap or are packed close together.

What’s nice about YOLOv12 is that it gives you instance segmentation straight out of the box. In practice, that means it can tell the difference between every single object in a scene, even if things are cluttered or chaotic. And since segmentation and detection are both baked into the same model, you get a much deeper, more useful understanding of each image or video frame—all at speeds that are fast enough for real-time use. Whether you’re trying to analyse medical scans, help a self-driving car make sense of the road, or just pull apart the action in a live video feed, it’s hard to beat this kind of accuracy and efficiency.

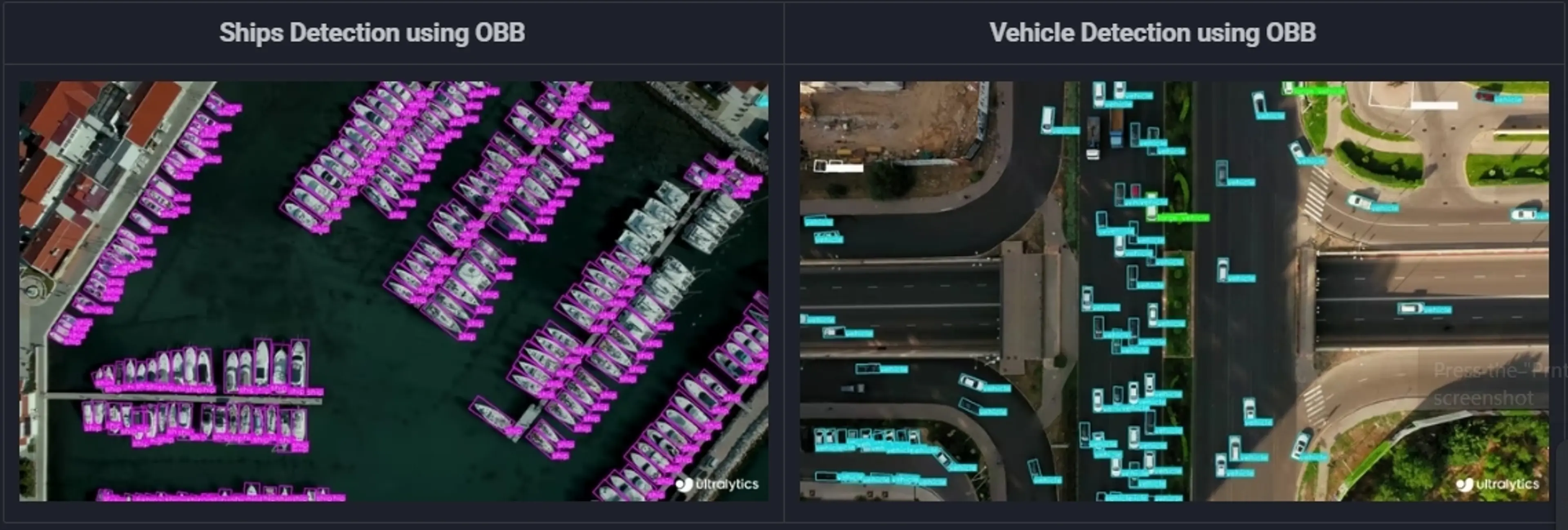
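
To show the difference between a box and a pixel-level mask, here is a small sketch in plain Python. The mask is a hand-made toy example, and the helper names `mask_bounding_box` and `box_fill_ratio` are made up for illustration. It measures how much of an object’s bounding box is actually covered by the object.

```python
def mask_bounding_box(mask):
    """Return (x_min, y_min, x_max, y_max), inclusive, around all 1-pixels."""
    rows = [y for y, row in enumerate(mask) if any(row)]
    cols = [x for row in mask for x, value in enumerate(row) if value]
    return min(cols), min(rows), max(cols), max(rows)


def box_fill_ratio(mask):
    """Fraction of the bounding box that really belongs to the object."""
    x_min, y_min, x_max, y_max = mask_bounding_box(mask)
    box_area = (x_max - x_min + 1) * (y_max - y_min + 1)
    object_pixels = sum(sum(row) for row in mask)
    return object_pixels / box_area


# Toy 5x5 mask of a plus-shaped object (1 = object pixel, 0 = background).
mask = [
    [0, 0, 0, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 0, 0, 0],
]
print(mask_bounding_box(mask))         # prints (1, 1, 3, 3)
print(round(box_fill_ratio(mask), 2))  # prints 0.56
```

Here almost half of the box is background, and that is exactly the information a segmentation mask keeps and a plain box throws away.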

Oriented Bounding Boxes

The classic way of finding objects in images is to draw regular rectangles around them. That works fine if everything’s straight and tidy, but in the real world, stuff gets messy—signs are crooked, containers get stacked at odd angles, and aerial photos seldom line up perfectly. Those standard boxes can end up grabbing way more background than you want or missing the object’s true shape.

Oriented bounding boxes (OBBs) solve this by letting the detection box rotate and fit the object’s real angle. So, whether it’s slanted text, a tipped-over crate, or a plane on the tarmac, the model’s frame hugs the object much more tightly. This makes the detection far more precise when things aren’t nicely lined up, which is super handy for aerial imagery and, in the manufacturing space, for working out how objects are oriented before processing.
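
A quick bit of geometry shows why the rotation matters. The sketch below is plain Python and assumes a box described by its centre, width, height and rotation angle (the function names are illustrative, and real models may encode the angle differently). It computes the four corners of an oriented box and compares its area with the upright rectangle you would need to contain it.

```python
import math


def obb_corners(cx, cy, w, h, angle_deg):
    """Corners of a w x h box centred at (cx, cy), rotated by angle_deg."""
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    half_extents = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    # Rotate each corner offset around the centre, then translate.
    return [
        (cx + dx * cos_t - dy * sin_t, cy + dx * sin_t + dy * cos_t)
        for dx, dy in half_extents
    ]


def axis_aligned_area(corners):
    """Area of the smallest upright rectangle that contains all corners."""
    xs = [x for x, _ in corners]
    ys = [y for _, y in corners]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))


# A long, thin 100x20 object lying at 45 degrees.
corners = obb_corners(0, 0, 100, 20, 45)
print(100 * 20)                           # oriented box area: 2000
print(round(axis_aligned_area(corners)))  # upright box area: 7200
```

For this object the upright box is 3.6 times larger than the oriented one, and all of that extra area is background.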

Classification

Image classification is really just about figuring out what the main thing in a picture is. The model looks at the whole photo and decides what label fits best: maybe it’s a dog, a bike, or a mountain. It doesn’t bother with the details of where the object is or what shape it has. It’s a super fast way to get the gist of what’s in an image, which comes in handy when you need to sort or search through a big batch of photos, or whenever you only care about the main subject and not all the little details. This is similar to what you would get back from an LLM, but with more predictable results and more control over the model’s behaviour and settings.
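
Under the hood, "deciding what label fits best" usually comes down to turning one raw score per class into probabilities and picking the largest. Here is a minimal sketch in plain Python; the scores and labels are made-up values, and a real model may already return probabilities rather than raw scores.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_label(scores, labels):
    """Return the most likely label and its probability."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]


labels = ["dog", "bike", "mountain"]
label, confidence = top_label([2.0, 0.5, 0.1], labels)
print(label, round(confidence, 2))  # prints dog 0.73
```

The explicit probability is what lets you set your own threshold, for example only accepting a label when the model is at least 90% sure.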

Pose Estimation

Pose estimation isn’t just about noticing there’s a person in a photo—it actually maps out where all the important body parts are, like elbows, knees, and shoulders. By connecting these points, you can see exactly how someone is standing, sitting, or moving around. It’s a big deal in things like sports analytics (to break down an athlete’s form), animation, fitness apps, or any situation where understanding body movement really makes a difference.
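
Once you have keypoints, a lot of the useful analysis is simple geometry. The sketch below is plain Python with made-up keypoint coordinates. It measures the angle at a joint, for example the elbow, from three (x, y) points, assuming the points are distinct.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at point b, between the segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    norm = math.hypot(*v1) * math.hypot(*v2)
    # Clamp to guard against tiny floating-point overshoots outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))


shoulder, elbow, wrist = (0, 0), (1, 0), (1, 1)
print(round(joint_angle(shoulder, elbow, wrist)))  # prints 90 (bent arm)
print(round(joint_angle((0, 0), (1, 0), (2, 0))))  # prints 180 (straight arm)
```

Tracking an angle like this frame by frame is the kind of thing a fitness or sports app would build on top of the raw keypoints.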

How focused vision models enable smarter, easier deployment

Honestly, what I like about YOLOv12 is that it saves you from all the usual headaches of computer vision projects. Normally, you’d be patching together three or four different types of models—one for detection, something else for tracking, maybe yet another for classification. With YOLOv12, it’s all baked in. You don’t have to think about it—just pick your model, plug it in, and get moving.

This kind of integration isn’t just a “nice-to-have”—it’s a huge advantage if you care about real-time performance. Everything runs fast and smooth, even on stuff like a basic mobile phone.

One more thing that I like: you can update your models on the fly, on both Android and iOS, and your users get the latest improvements without a new app release. It’s also worth mentioning that you can ship, for example, small, medium, and large versions of a model to strike the right balance between accuracy and performance on devices that differ greatly in hardware.
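
As a rough idea of how picking a model per device could work, here is a tiny sketch. Everything in it is hypothetical: the file names, the RAM thresholds, and the choice of RAM as the deciding factor are placeholders, and it is written in Python only for readability. In practice this logic would live in the app or in whatever service hands out model updates.

```python
# Hypothetical variants, ordered from most to least demanding:
# (minimum device RAM in GB, model file name).
MODEL_VARIANTS = [
    (8, "detector_large.tflite"),
    (4, "detector_medium.tflite"),
    (0, "detector_small.tflite"),
]


def pick_model(device_ram_gb):
    """Return the largest model variant the device is assumed to handle."""
    for min_ram_gb, model_file in MODEL_VARIANTS:
        if device_ram_gb >= min_ram_gb:
            return model_file
    return MODEL_VARIANTS[-1][1]  # fall back to the smallest variant


print(pick_model(6))  # prints detector_medium.tflite
```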

Roboflow & Google Colab: Lowering the barriers to entry

For the labelling part, I went with Roboflow. It was a lifesaver: super easy to use. The drag-and-drop interface just works, and there’s a massive collection of public datasets (Roboflow Universe). Also, once you add images to a Roboflow project, it suggests similar images that you can add to the same project. Roboflow supports multiple ways to label images, including basic box labelling, polygon-based labelling, and even auto-labelling that can label thousands of images in a matter of minutes. Once I was done labelling, exporting was painless; you get a dataset that is ready to use for model training.

For training, I moved the whole project over to Google Colab. It’s just the most hassle-free option for me—there’s no need to worry about my own hardware. I like that you can quickly grab a beefy GPU runtime, usually a T4 or an A100, and there are plenty of other hardware options available. I used Python for training YOLOv12, and Colab made the whole process smooth—just fire up a notebook, run the code, and you’re off to the races.
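
For a sense of what the notebook code can look like, here is a minimal sketch. It assumes the Ultralytics Python package (`pip install ultralytics`), a pretrained checkpoint named `yolo12n.pt`, and a `data.yaml` file from the dataset export; the function name, epoch count and image size are placeholders, not the settings used in this project.

```python
def train_and_export(data_yaml, weights="yolo12n.pt", epochs=50, image_size=640):
    """Fine-tune a pretrained detector on a custom dataset and export to TFLite.

    data_yaml is the path to the dataset description file from the export.
    """
    # Imported here so the sketch can be read and loaded without the package.
    from ultralytics import YOLO

    model = YOLO(weights)                      # start from pretrained weights
    model.train(data=data_yaml, epochs=epochs, imgsz=image_size)
    metrics = model.val()                      # evaluate on the validation split
    exported_path = model.export(format="tflite")
    return metrics, exported_path


# In a Colab cell with a GPU runtime, usage would look like:
# metrics, tflite_path = train_and_export("/content/dataset/data.yaml")
```

Starting from pretrained weights rather than from scratch is what keeps training time reasonable on a single Colab GPU.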

Mobile app integration

For the mobile side, I focused on Android and went with TensorFlow Lite. The setup’s pretty straightforward: the app just loads up the TFLite model, preps each input image as a bitmap, and runs everything right there on the device. You get back the coordinates for the boxes, the confidence scores, and the labels for whatever it finds. Honestly, it’s fast—even on middle-of-the-road Android phones, real-time detection wasn’t a problem.
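
The boxes, scores and labels usually need a little clean-up before you draw them. The sketch below shows a common post-processing step that is not described above: drop low-confidence detections, then use non-maximum suppression (NMS) to remove near-duplicate boxes for the same object. It is written in Python for readability rather than as Android code, and the detection format and thresholds are assumptions; what your exported model actually returns depends on how it was exported.

```python
def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def filter_detections(detections, min_confidence=0.5, iou_threshold=0.5):
    """Keep confident detections and suppress overlapping duplicates.

    detections is a list of (box, confidence, label) tuples.
    """
    candidates = sorted(
        (d for d in detections if d[1] >= min_confidence),
        key=lambda d: d[1],
        reverse=True,
    )
    kept = []
    for det in candidates:
        # Keep a box unless it heavily overlaps a stronger box of the same label.
        if all(det[2] != k[2] or iou(det[0], k[0]) < iou_threshold for k in kept):
            kept.append(det)
    return kept


raw = [
    ((10, 10, 110, 110), 0.9, "dog"),
    ((12, 12, 112, 112), 0.8, "dog"),      # near-duplicate of the first box
    ((200, 50, 260, 120), 0.7, "person"),
    ((0, 0, 30, 30), 0.2, "cat"),          # below the confidence threshold
]
for box, confidence, label in filter_detections(raw):
    print(label, confidence, box)
```

With these inputs only the stronger dog box and the person box survive.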

I didn’t do the iOS part this time, but you can use TFLite models on iOS as well. That said, if you want the best performance on Apple devices, you’re better off converting the model to Core ML so you can take advantage of the Neural Engine in modern devices.

All in all, getting YOLO running on Android this way felt solid. The integration was clean, quick, and straightforward.

Trade-offs and considerations

YOLOv12 is seriously fast and flexible, but like anything else, it’s not perfect for every single job.

First, all models are only as good as your training data. If your images aren’t labeled well, or your dataset isn’t varied enough, don’t be surprised if you get spotty results. Spend time making sure your dataset is broad and covers all the edge cases you care about—it pays off.

Also, if you’re after absolute top-shelf accuracy, like spotting tiny, similar, overlapping objects, or catching super subtle details, YOLOv12 probably isn’t your best bet. Models like Mask DINO, combined with powerful feature extractors (like DINOv2), can squeeze out more precision, especially for pixel-perfect segmentation work. But there’s a catch: those models need way more computing power, often running only on beefy servers with high-end GPUs, which makes real-time performance almost impossible to achieve.

For stuff where you absolutely need pixel-level outlines—think medical scans or industrial quality checks—you’ll want to look at more specialized segmentation models. Just be ready for slower speeds and heavier hardware requirements.

Licensing

Just a heads-up on licensing: YOLOv12 uses AGPL-3.0, so if you’re planning to publish your mobile app to the app store, you’ll either need to make your app’s source code public or look into buying a commercial license. If you want to keep your code private, you can’t just use it as-is for a closed-source app. Worth keeping in mind before you launch.

Free vs. paid tools

Honestly, the open-source license on YOLOv12 is a lifesaver if you’re messing around with side projects or research.

And look, YOLOv12 is just one option. If you want something that doesn’t require buying a license for a production application, and you can accept that it won’t give you the best accuracy, you can use the object detection library from Firebase ML Kit.

However, if you’re really chasing the best detection accuracy, check out models like Mask DINO with decent feature extractors like DINOv2, but keep in mind that you’ll need a server for that, and you’re unlikely to achieve real-time performance.

What did the team say?

I got feedback from everyone: R&D, developers, designers, project managers and clients. Not surprisingly, everyone cared about different stuff. Here’s what stood out:

Project Managers: “Real-time model updates mean less waiting around and no app size headaches. We just pick the right model for the phone and move on.”

Developers: “We’ve got a YOLOv12 model ready for almost anything, so we don’t have to reinvent the wheel every time.”

Designers: “It’s wild seeing YOLOv12 run this smoothly on any phone—it finally lets us design whatever we want, no matter the device.”

If you’re curious about using YOLOv12 in a real-world setting—or want help thinking through how computer vision might solve a problem in your product or service—get in touch.

We love a prototype, and we’re happy to share more details (or a live demo) anytime.